Legit.Health Plus description and specifications

Manufacturer details

| Manufacturer data | |

|---|---|

| Legal manufacturer name | AI Labs Group S.L. |

| Address | Street Gran Vía 1, BAT Tower, 48001, Bilbao, Bizkaia (Spain) |

| SRN | ES-MF-000025345 |

| Person responsible for regulatory compliance | Alfonso Medela, Saray Ugidos |

| E-mail | office@legit.health |

| Phone | +34 638127476 |

| Trademark | Legit.Health |

Product characterization

| Information | |

|---|---|

| Device name | Legit.Health Plus (hereinafter, the device) |

| Model and type | NA |

| Version | 1.1.0.0 |

| Basic UDI-DI | 8437025550LegitCADx6X |

| Certificate number (if available) | MDR 792790 |

| EMDN code(s) | Z12040192 (General medicine diagnosis and monitoring instruments - Medical device software) |

| GMDN code | 65975 |

| Class | Class IIb |

| Classification rule | Rule 11 |

| Novel product (True/False) | FALSE |

| Novel related clinical procedure (True/False) | FALSE |

| SRN | ES-MF-000025345 |

Intended purpose

Intended use

The device is a computational software-only medical device intended to support health care providers in the assessment of skin structures, enhancing efficiency and accuracy of care delivery, by providing:

- quantification of intensity, count and extent of visible clinical signs

- interpretative distribution representation of possible International Classification of Diseases (ICD) categories.

Quantification of intensity, count and extent of visible clinical signs

The device provides quantifiable data on the intensity, count and extent of clinical signs, including, but not limited to:

- erythema,

- desquamation,

- induration,

- crusting,

- xerosis (dryness),

- swelling (oedema),

- oozing,

- excoriation,

- lichenification,

- exudation,

- wound depth,

- wound border,

- undermining,

- hair loss,

- necrotic tissue,

- granulation tissue,

- epithelialization,

- nodule,

- papule,

- pustule,

- cyst,

- comedone,

- abscess,

- draining tunnel,

- inflammatory lesion,

- exposed wound, bone and/or adjacent tissues,

- slough or biofilm,

- maceration,

- external material over the lesion,

- hypopigmentation or depigmentation,

- hyperpigmentation,

- scar,

- jaundice (icterus).

Image-based recognition of visible ICD categories

The device is intended to provide an interpretative distribution representation of possible International Classification of Diseases (ICD) categories that might be represented in the pixels content of the image.

Device description

The device is a computational software-only medical device leveraging computer vision algorithms to process images of the epidermis, the dermis and its appendages, among other skin structures. Its principal function is to provide a wide range of clinical data from the analyzed images to assist healthcare practitioners in their clinical evaluations and allow healthcare provider organisations to gather data and improve their workflows.

The generated data is intended to aid healthcare practitioners and organizations in their clinical decision-making process, thus enhancing the efficiency and accuracy of care delivery.

The device should never be used to confirm a clinical diagnosis. On the contrary, its result is one element of the overall clinical assessment. Indeed, the device is designed to be used when a healthcare practitioner chooses to obtain additional information to consider a decision.

Intended medical indication

The device is indicated for use on images of visible skin structure abnormalities to support the assessment of all diseases of the skin incorporating conditions affecting the epidermis, its appendages (hair, hair follicle, sebaceous glands, apocrine sweat gland apparatus, eccrine sweat gland apparatus and nails) and associated mucous membranes (conjunctival, oral and genital), the dermis, the cutaneous vasculature and the subcutaneous tissue (subcutis).

Intended patient population

The device is intended for use on images of skin from patients presenting visible skin structure abnormalities, across all age groups, skin types, and demographics.

Intended user

The medical device is intended for use by healthcare providers to aid in the assessment of skin structures.

User qualification and competencies

In this section we specify the qualifications and competencies that users need in order to use the device properly, provided that they already belong to their professional category. In other words, when describing the qualifications of healthcare professionals (HCPs), it is assumed that they already have the qualifications and competencies native to their profession.

Healthcare professionals

No official qualifications are needed, but it is advisable that HCPs have the following competencies:

- Knowledge on how to take images with smartphones.

IT professionals

IT professionals are responsible for the integration of the medical device into the healthcare organisation's system.

No specific official qualifications are needed, but it is advisable that IT professionals using the device have the following competencies:

- Basic knowledge of FHIR

- Understanding of the output of the device.

Use environment

The device is intended to be used in the setting of healthcare organisations and their IT departments, which commonly are situated inside hospitals or other clinical facilities.

The device is intended to be integrated into the healthcare organisation's system by IT professionals.

Operating principle

The device is a computational medical tool leveraging computer vision algorithms to process images of the epidermis, the dermis and its appendages, among other skin structures.

Body structures

The device is intended for use on the epidermis, its appendages (hair, hair follicle, sebaceous glands, apocrine sweat gland apparatus, eccrine sweat gland apparatus and nails) and associated mucous membranes (conjunctival, oral and genital), the dermis, the cutaneous vasculature and the subcutaneous tissue (subcutis).

In fact, the device is intended for use on visible skin structures. As such, it can only quantify clinical signs that are visible, and distribute the probabilities across ICD categories that are visible.

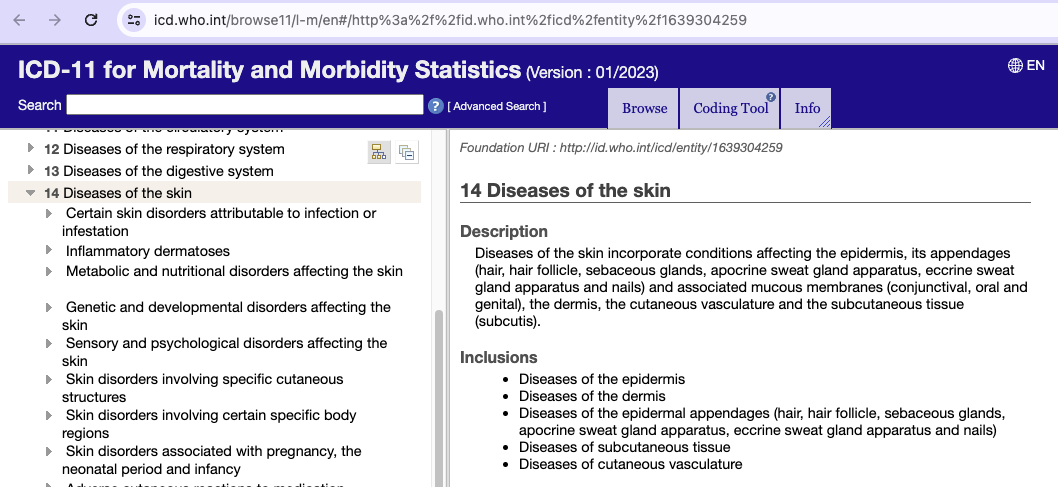

ICD categories

As mentioned, the image-based recognition processor provides an interpretative distribution of probabilities of visible ICD categories.

In other words, the device does not provide a true positive or true negative for any specific condition, like diagnostic tests. On the contrary, the device provides the full list of ICD categories and the probability distributed across all the categories.

Regarding the categories, as explained in the Body structures section, the device is intended for use on the epidermis, its appendages (hair, hair follicle, sebaceous glands, apocrine sweat gland apparatus, eccrine sweat gland apparatus and nails) and associated mucous membranes (conjunctival, oral and genital), the dermis, the cutaneous vasculature and the subcutaneous tissue (subcutis). The specific ICD categories covered in this definition are those pertaining to chapter 14 (Diseases of the skin) of the International Classification of Diseases.

More information regarding ICD categories can be found in the International Classification of Diseases 11th Revision.

Difference between multiclass-probability distribution and diagnosis

The nature of the output of the device is frequently misunderstood, and one may wrongly compare it with devices whose output is fundamentally different.

To understand this correctly, let's remember that the device provides the following information to HCPs:

- quantifiable data on the intensity, count and extent of clinical signs such as erythema, desquamation, and induration, among others.

With this feature, people seem to correctly understand the nature of the output of the device.

- interpretative distribution representation of possible International Classification of Diseases (ICD) categories that might be represented in the pixels content of the image

This feature seems to create some confusion when not understood correctly.

In this regard, the main point is that the device cannot confirm the presence of a condition; it outputs the full array of ICD categories related to the skin, with a probabilistic distribution.

In other words, the device does not provide data only on whether a condition is or is not present, but the full array of distributed probabilities across categories. This is fundamentally different from diagnostic tests, such as COVID-19 testing kits, whose output is a true positive or a true negative.

The device serves as a valuable tool that provides quantitative data on clinical signs and an interpretative distribution of potential ICD categories. Moreover, the role of the device is to complement the expertise of HCPs, and it is impossible to use it with the intention of replacing the HCP. The diagnostic decision lies with the healthcare professional who combines their clinical knowledge, patient history, lab test and many other sources of information, including the device.

Keep in mind the fundamental difference between our device and a diagnostic test such as a COVID-19 testing kit or an HIV test, where the output is a positive or a negative diagnostic confirmation.

Our device does not provide a true/false output. It does not output a positive or negative result. On the contrary, our device always provides a probabilistic distribution representation of possible categories.

| Device | Result type | Output |

|---|---|---|

| HIV test | True positive and true negative results | Boolean (TRUE/FALSE) |

| Legit.Health Plus | Probabilistic distribution | Array of ICD categories and distributed probabilities |

As such, clinicians may use this information to help them think about how to help their patients, but it is inherently impossible to use it as a confirmation test, like an HIV test or a COVID-19 test.

As previously mentioned, one of the outputs of the device is a probabilistic distribution of categories. This is fundamentally different from a diagnostic test. This is true regardless of how concentrated the distribution of the probabilities is.

To be more specific, there will be cases where the distribution is platykurtic, meaning that the probabilities are spread relatively evenly instead of being concentrated in a few categories. In other cases, the distribution may be leptokurtic, meaning that the probability is more concentrated in a few ICD categories. In both cases, the device's output remains a probabilistic distribution across all ICD categories.
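The difference between a flat and a concentrated output can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the category labels and scores are hypothetical, the use of a softmax to obtain probabilities is not confirmed by this document, and normalized entropy is used here as a simple stand-in for the kurtosis terminology above. In both cases the output is still a full distribution that sums to 1.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs):
    """Shannon entropy divided by its maximum: near 1 is flat, near 0 is concentrated."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


# Hypothetical category labels and scores, for illustration only.
categories = ["category A", "category B", "category C", "category D"]
flat = softmax([0.1, 0.0, 0.2, 0.1])
peaked = softmax([6.0, 0.5, 0.2, 0.1])

for name, probs in (("flat", flat), ("peaked", peaked)):
    rounded = dict(zip(categories, (round(p, 3) for p in probs)))
    print(name, rounded, round(normalized_entropy(probs), 3))
```

Even in the peaked case, every category keeps a non-zero probability, which is the property that distinguishes this kind of output from a boolean test result.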

The device's output acts as a supportive element, empowering HCPs to make informed decisions while ensuring that the diagnosis is not solely reliant on the device's probabilistic distribution but is a holistic assessment incorporating the expertise of the healthcare provider.

Contraindications

We advise not to use the device in:

- Skin structures located at a distance greater than 1 cm from the eye, beyond the optimal range for examination.

- Skin areas that are obscured from view, situated within skin folds or concealed in other manners, making them inaccessible for camera examination.

- Regions of the skin showcasing scars or fibrosis, indicative of past injuries or trauma.

- Skin structures exhibiting extensive damage, characterized by severe ulcerations or active bleeding.

- Skin structures contaminated with foreign substances, including but not limited to tattoos and creams.

- Skin structures situated at anatomically special sites, such as underneath the nails, requiring special attention.

- Portions of skin that are densely covered with hair, potentially obstructing the view and hindering examination.

Alerts and warnings

If you observe incorrect operation of the device, notify us as soon as possible by e-mail at support@legit.health. We, as manufacturers, will proceed accordingly. Any serious incident should be reported to us, as well as to the national competent authority of the country.

No undesirable side effects specifically related to the use of the software are known or foreseen.

Variants and models

The device does not have any variants.

Expected lifetime

We consider the safe and effective use of the device throughout its entire lifecycle, in terms of the state of the art (SOTA) and in accordance with the Medical Device Regulation 2017/745 and available guidance.

Following the essential considerations for the lifecycle of medical device software (MDSW) laid out by the European Association of Medical devices Notified Bodies (Team-NB-PositionPaper-Lifetime-2023), we have determined an expected operational lifetime that is in accordance with the software nature of the device and the components and platforms used.

Device Lifetime

The expected operational lifetime of the device is established at 5 years, which is subject to regular software updates and the lifecycle of the integrated components and platforms. The lifetime will be extended in equivalent spans as design and development continue and as maintenance and re-design activities are carried out.

This timeline accounts for the expected evolution of the underlying operating systems and tools, the progression of medical device technology, and the necessary update cycles to maintain security and operability.

Software Change Management

The software will undergo regular updates to enhance functionality, address issues, and incorporate improvements based on post-market data and user feedback. These updates are part of our Software Change Management Plan defined in the procedure GP-023 Change control management.

Operating System and Tool Obsolescence

We have a proactive strategy for managing the end of life of operating systems and tools that our device relies on, ensuring continuity and compliance. This plan is detailed in document GP-019 Software validation plan.

SOUP Management

Changes and obsolescence of Software of Unknown Provenance (SOUP) are actively monitored and managed to mitigate risks associated with security and obsolescence. In the document GP-012 Design, Redesign and Development, and more specifically in the section SOUP management, we outline a monitoring plan for the SOUPs used in the device.

Security and Compatibility

Maintenance of security, compatibility, and operability is an ongoing commitment to ensure the device remains safe for its intended use. Device compatibility and interoperability are inherently guaranteed, as one of the software requirements (REQ_006) of the DHF dictates that the structure of the data exchanged with the API must follow the FHIR standard.

When it comes to the security of the device, the primary interface available is the web API, which brings cybersecurity into focus. The procedure titled SP-012-002 Cybersecurity and Transparency Requirements outlines the necessary guidelines for development, deployment, and ongoing management to mitigate cybersecurity threats.

Qualifications and competencies needed by users

In this section we specify the qualifications and competencies that users need in order to use the device properly, provided that they already belong to their professional category. In other words, when describing the qualifications of HCPs, it is assumed that HCPs already have the qualifications and competencies native to their profession.

IT Technicians

No specific official qualifications are needed, but it is advisable that IT Technicians using the device have the following competencies:

- Basic knowledge of FHIR

- Understanding of the output of the device

The IFU helps IT Technicians with this topic by linking to the FHIR documentation and identifying the FHIR resource involved in the output of the device. The IFU also includes a section called output that covers precisely this topic. Furthermore, the IFU explains some workflows that IT Technicians may implement, with examples of how the output could be used.

HCPs

No official qualifications are needed, but it is advisable that HCPs have the following competencies:

- Knowledge on how to take images with smartphones

The IFU helps HCPs with this topic by providing tips on how to take pictures.

Clinical benefits

- Comprehensive Dermatological Data for Informed Clinical Decisions. Comprehensive Analysis for Informed Decision Making. Improvement in Dermatological Assessment Accuracy. Precise Detection of Dermatological Features. Empowerment of Health Care Practitioners.

- Faster Measurement of Clinical Signs. Accurate and Objective Measurement of Clinical Signs. Precise Quantification, Count and Extent measure of Skin Issues. Facilitation of Longitudinal Skin Condition Monitoring. Consistent Tracking of Patient Condition Over Time. More agile follow-up consultations.

- Improved Operational Efficiency for Healthcare Organizations. Streamlining Healthcare Operations. Data-Driven Insights for Workflow Optimization.

- Support in Preliminary ICD Classification through Image Analysis. Aiding in ICD Classification. Diagnostic Support.

Justification that it is a medical device

According to the definition of medical device established in Article 2 of Chapter I of the Medical Device Regulation (MDR) 2017/745, the device is an instrument, apparatus, appliance, software, implant, reagent, material or other article intended by the manufacturer to be used, alone or in combination, for human beings for one or more of the following specific medical purposes:

- diagnosis, prevention, monitoring, prediction, prognosis, treatment or alleviation of disease,

- diagnosis, monitoring, treatment, alleviation of, or compensation for, an injury or disability,

- investigation, replacement or modification of the anatomy or of a physiological or pathological process or state,

- providing information by means of in vitro examination of specimens derived from the human body, including organ, blood and tissue donations, and which does not achieve its principal intended action by pharmacological, immunological or metabolic means, in or on the human body, but which may be assisted in its function by such means.

MDR 2017/745 classification according to risks

In order to find the appropriate risk class for the device according to Medical Device Regulation (MDR) 2017/745, we focus on the following content:

ANNEX VIII: Classification rules

Chapter I: Definitions specific to classification rules

First, we must understand the definitions of the terms.

According to the Chapter I of the Annex VIII of the Medical Device Regulation (MDR) 2017/745, the following definitions apply to the medical device:

| # | DEFINITIONS SPECIFIC TO CLASSIFICATION RULES |

|---|---|

| 1 | DURATION OF USE |

| 1.1 | 'Transient' means normally intended for continuous use for less than 60 minutes. |

| 1.2 | 'Short term' means normally intended for continuous use for between 60 minutes and 30 days. |

| 1.3 | 'Long term' means normally intended for continuous use for more than 30 days. |

| 2 | INVASIVE AND ACTIVE DEVICES |

| 2.1 | 'Body orifice' means any natural opening in the body, as well as the external surface of the eyeball, or any permanent artificial opening, such as a stoma. |

| 2.2 | 'Surgically invasive device' means: (a) an invasive device which penetrates inside the body through the surface of the body, including through mucous membranes of body orifices with the aid or in the context of a surgical operation; and (b) a device which produces penetration other than through a body orifice. |

| 2.3 | 'Reusable surgical instrument' means an instrument intended for surgical use in cutting, drilling, sawing, scratching, scraping, clamping, retracting, clipping or similar procedures, without a connection to an active device and which is intended by the manufacturer to be reused after appropriate procedures such as cleaning, disinfection and sterilisation have been carried out. |

| 2.4 | 'Active therapeutic device' means any active device used, whether alone or in combination with other devices, to support, modify, replace or restore biological functions or structures with a view to treatment or alleviation of an illness, injury or disability. |

| 2.5 | 'Active device intended for diagnosis and monitoring' means any active device used, whether alone or in combination with other devices, to supply information for detecting, diagnosing, monitoring or treating physiological conditions, states of health, illnesses or congenital deformities. |

| 2.6 | 'Central circulatory system' means the following blood vessels: arteriae pulmonales, aorta ascendens, arcus aortae, aorta descendens to the bifurcatio aortae, arteriae coronariae, arteria carotis communis, arteria carotis externa, arteria carotis interna, arteriae cerebrales, truncus brachiocephalicus, venae cordis, venae pulmonales, vena cava superior and vena cava inferior. |

| 2.7 | 'Central nervous system' means the brain, meninges and spinal cord. |

| 2.8 | 'Injured skin or mucous membrane' means an area of skin or a mucous membrane presenting a pathological change or change following disease or a wound. |

Chapter III: Classification rules

Now that we know the definitions, it is evident that the device is both a non-invasive device and an active device. We can now find the right class by looking at which rule best applies to our case.

Following the classification rules established in Chapter III, we see that indeed our device is best characterised by Rule 11.

First, we look at 4. Non-invasive devices, and we see that:

Rule 1 All non-invasive devices are classified as class I, unless one of the rules set out hereinafter applies.

So we keep reading, and we get to 6. Active devices. There we discard Rule 9 and Rule 10, because neither of them describes our device. However, Rule 11 is representative of the device.

Rule 11

Here's what Rule 11 says:

Software intended to provide information which is used to take decisions with diagnosis or therapeutic purposes is classified as class IIa, except if such decisions have an impact that may cause:

- death or an irreversible deterioration of a person's state of health, in which case it is in class III; or

- a serious deterioration of a person's state of health or a surgical intervention, in which case it is classified as class IIb.

Software intended to monitor physiological processes is classified as class IIa, except if it is intended for monitoring of vital physiological parameters, where the nature of variations of those parameters is such that it could result in immediate danger to the patient, in which case it is classified as class IIb. All other software is classified as class I.
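The branching structure of Rule 11 quoted above can be sketched as a small decision function. This is only an illustrative reading of the quoted text, not a regulatory determination: the function name, the parameter names and the string labels for the impact levels are hypothetical.

```python
def rule_11_class(informs_decisions, worst_impact="other",
                  monitors_physiology=False, vital_immediate_danger=False):
    """Return the class suggested by the Rule 11 text for the given answers.

    worst_impact is a hypothetical label for the worst impact of the decisions:
    "death_or_irreversible", "serious_or_surgical" or "other".
    """
    if informs_decisions:
        # Software providing information used for diagnostic or therapeutic decisions.
        if worst_impact == "death_or_irreversible":
            return "III"
        if worst_impact == "serious_or_surgical":
            return "IIb"
        return "IIa"
    if monitors_physiology:
        # Software intended to monitor physiological processes.
        return "IIb" if vital_immediate_danger else "IIa"
    # All other software.
    return "I"


print(rule_11_class(True))                         # IIa
print(rule_11_class(True, "serious_or_surgical"))  # IIb
```

The sketch makes explicit that, for decision-support software, the class depends entirely on the worst impact that the supported decisions may have.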

Guideline MDCG 2019-11

To help us determine more clearly whether we are class IIa or class IIb according to Rule 11, we also classify the device with the help of guidance MDCG 2019-11 on Qualification and Classification of Software in MDR 2017/745.

Extracted from Annex III - Usability of the IMDRF risk classification framework in the context of the MDR, of guidance MDCG 2019-11:

| | High: Treats or Diagnoses (IMDRF 5.1.1) | Medium: Drives Clinical Management (IMDRF 5.1.2) | Low: Informs Clinical Management (everything else) |

|---|---|---|---|

| Critical IMDRF 5.2.1 | Class III Category IV.i | Class IIb Category III.i | Class IIa II.i |

| Serious IMDRF 5.2.2 | Class IIb Category III.ii | Class IIa III.ii | Class IIa II.ii |

| Non-serious (everything else) | Class IIa Category II.iii | Class IIa II.iii | Class IIa I.ii |

According to the guidance, classification is determined by two aspects:

- Columns: the significance of information provided by the medical device software to a healthcare situation related to diagnosis or therapy

- Rows: The effect over the state of healthcare situation or patient condition

According to the rationale of Guidance MDCG 2019-11, the risk classification for the device is Class IIa.
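The table lookup described above can be written as a small sketch. The class values are copied from the table as reproduced in this document; the dictionary name, the function name and the lowercase keys are hypothetical choices made for illustration.

```python
# Keys are (state of healthcare situation, significance of information).
MDCG_MATRIX = {
    ("critical", "high"): "III",
    ("critical", "medium"): "IIb",
    ("critical", "low"): "IIa",
    ("serious", "high"): "IIb",
    ("serious", "medium"): "IIa",
    ("serious", "low"): "IIa",
    ("non-serious", "high"): "IIa",
    ("non-serious", "medium"): "IIa",
    ("non-serious", "low"): "IIa",
}


def mdcg_class(situation, significance):
    """Look up the class for a row (situation) and a column (significance)."""
    return MDCG_MATRIX[(situation.lower(), significance.lower())]


# Medium significance and a serious situation, as argued for the device.
print(mdcg_class("serious", "medium"))  # IIa
```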

In the following two sections, we explain in detail why each is the right fit for the device.

Significance of information: medium

The significance of information is medium. The reason is that the device Drives clinical management, but does not treat nor diagnose. This can be understood by looking at the document Software as a Medical Device: Possible Framework for Risk Categorization and Corresponding Considerations authored by the IMDRF Software as a Medical Device (SaMD) Working Group.

Driving clinical management infers that the information provided by the SaMD will be used to aid in treatment, aid in diagnoses, to triage or identify early signs of a disease or condition will be used to guide next diagnostics or next treatment interventions:

- To aid in treatment by providing enhanced support to safe and effective use of medicinal products or a medical device.

- To aid in diagnosis by analyzing relevant information to help predict risk of a disease or condition or as an aid to making a definitive diagnosis.

- To triage or identify early signs of a disease or conditions.

That description fits very well with the intended purpose of the device. Thus, the significance of information is medium.

Effect over patient health: serious

The effect over the state of healthcare situation or patient condition is serious. This can be understood by looking at the document Software as a Medical Device: Possible Framework for Risk Categorization and Corresponding Considerations authored by the IMDRF Software as a Medical Device (SaMD) Working Group.

Here's the definition for Serious situation or condition:

Situations or conditions where accurate diagnosis or treatment is of vital importance to avoid unnecessary interventions (e.g., biopsy) or timely interventions are important to mitigate long term irreversible consequences on an individual patient's health condition or public health. SaMD is considered to be used in a serious situation or condition when:

- The type of disease or condition is:

- Moderate in progression, often curable,

- Does not require major therapeutic interventions,

- Intervention is normally not expected to be time critical in order to avoid death, longterm disability or other serious deterioration of health, whereby providing the user an ability to detect erroneous recommendations.

- Intended target population is NOT fragile with respect to the disease or condition.

- Intended for either specialized trained users or lay users.

That description fits very well with the intended purpose of the device. Let's remember that the device is used to provide the following information to HCPs:

- Providing quantifiable data on the intensity, count and extent of clinical signs such as erythema, desquamation, and induration, among others. In this regard, the device does provide specific scoring for the clinical signs. However, none of the clinical signs are serious or life-threatening.

- Providing an interpretative distribution representation of possible International Classification of Diseases (ICD) categories that might be represented in the pixels content of the image. In this regard, the device does not provide specific information about a single condition; it outputs the array of ICD categories related to the skin, with an interpretative distribution. Among these classes, there may be one class that can be tied to a life-threatening condition, but the device never provides data on a life-threatening condition alone; it provides the wide range of distributed probabilities across categories.

Keep in mind the fundamental difference between our device and a diagnostic test such as a COVID-19 testing kit or an HIV test, where the output is a positive or a negative diagnostic confirmation. Our device does not provide a true/false output. It does not output a positive or negative result. On the contrary, our device always provides an interpretative distribution representation of possible International Classification of Diseases (ICD) categories that might be represented in the pixels content of the image.

| Device | Result type | Output |

|---|---|---|

| HIV test | True positive and true negative results | Boolean (TRUE/FALSE) |

| Legit.Health Plus | Interpretative distribution | Array of ICD categories and distributed probabilities |

With that in mind, let's check one by one the requirements specified in IMDRF 5.2.2:

| Requirement for IMDRF 5.2.2 | Applicable | Clarification |

|---|---|---|

| Condition is moderate in progression, often curable | TRUE | Across all the ICD categories, all of them are curable and moderate in progression. |

| Condition does not require major therapeutic interventions | TRUE | Across all the ICD categories, none of them requires major therapeutic interventions. |

| Intervention is normally not expected to be time critical to avoid serious outcomes, providing the user an ability to detect erroneous recommendations | TRUE | Across all the ICD categories, only one is time-critical. In other words, only 0.03% of categories are time-critical, but most importantly: the device's purpose is not to determine whether or not that one time-critical condition is present (positive / negative), but to provide a distribution across all ICD categories. |

| Intended target population is not fragile with respect to the disease or condition. | TRUE | Target population is not fragile |

| Intended for either specialized trained users or lay users | TRUE | Intended for specialized trained users |

For that reason, and keeping in mind the intended use of the device, the effect over patient health is serious, but not critical.

Novel features

The device is the result of an incremental improvement of an existing technology. It has been developed to improve on the current state of the art in processing photographs of skin structures with artificial intelligence algorithms.

Image-based recognition of visible ICD categories

One core feature of the device is a deep learning-based image recognition technology for the recognition of ICD categories. In other words: when the device is fed an image or a set of images, it outputs an interpretative distribution representation of possible International Classification of Diseases (ICD) categories that might be represented in the pixels content of the image.

The device makes its prediction entirely based on the visual content of the images, with no additional parameters.

The device has been developed following an architecture called Vision Transformer (ViT). This architecture is inspired by the Transformer architecture, which is extensively used in other areas such as natural language processing (NLP) and has brought significant advancements in terms of performance.

A more detailed explanation of this architecture can be found in the publication "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale" (Dosovitskiy et al., 2020).
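As a rough illustration of the first step of a ViT, the following sketch splits an image into fixed-size patches and flattens each one into a vector, which is what the cited publication refers to as turning an image into a sequence of "words". The 224x224 input size and the 16x16 patch size are assumptions borrowed from common ViT configurations; this document does not state the values used by the device, and the function name is hypothetical.

```python
def image_to_patches(image, patch_size=16):
    """Split an H x W x C image (nested lists) into flattened square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch: rows, then pixels, then channel values.
            patch = [
                value
                for row in image[top:top + patch_size]
                for pixel in row[left:left + patch_size]
                for value in pixel
            ]
            patches.append(patch)
    return patches


# A blank RGB image with assumed dimensions, for illustration only.
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
tokens = image_to_patches(image)
print(len(tokens), len(tokens[0]))  # 196 768
```

In a full ViT, each flattened patch would then be linearly projected, combined with a position embedding and passed through Transformer encoder layers; those steps are omitted here.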

Data collection, model training and validation

Dataset description

In order to develop the image recognition processor, we created an image dataset by collecting images from diverse sources. To achieve this, we collaborated with medical centers, including IDEI, Hospital Universitario de Cruces, Hospital Universitario de Basurto, Centro de Salud Sodupe-Güeñes, Centro de Salud Balmaseda, Centro de Salud Buruaga, Centro de Salud Zurbaran, DermoMedic, Hospital Universitario Ramón y Cajal and the group Ribera Salud, which provided images with confirmed ICD categories. We also included the most relevant and reputable skin image datasets, which include the confirmed and verified diagnosis for every image. This combination of sources resulted in a dataset with a total size of 181591 RGB images, with an average image size of 756x1048 pixels, close to 1000 different categories, and a diverse representation of age, sex and phototype.

The images and metadata collected or generated in clinical validations at medical centers are always included in our test sets. This enables us to analyse performance on unseen real-world data.
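The splitting policy described above can be sketched as follows. Only the rule that clinical validation images always go to the test set comes from this document; the record fields, the source labels, the random split of the remaining images and the 20% test fraction are hypothetical choices made for illustration.

```python
import random


def split_dataset(records, test_fraction=0.2, seed=0):
    """Send clinical validation records to the test set; split the rest at random."""
    rng = random.Random(seed)
    train, test = [], []
    for record in records:
        if record["source"] == "clinical_validation":
            test.append(record)  # never seen during training
        elif rng.random() < test_fraction:
            test.append(record)
        else:
            train.append(record)
    return train, test


# Hypothetical records: every fifth image comes from a clinical validation.
records = [
    {"id": i, "source": "clinical_validation" if i % 5 == 0 else "public_dataset"}
    for i in range(50)
]
train, test = split_dataset(records)
print(len(train), len(test))
```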

With the goal of incorporating the widest range of image qualities, we deliberately diversified the sources accessed to create the dataset. As a result, the dataset presents a high variability in terms of image quality. More specifically, the dataset varies both in acquisition settings from one source to another (e.g. different imaging devices, lighting, focus, distance) and in how images are encoded (e.g. JPEG compression, PNG format). Achieving this variety does not present a difficulty, because nothing inherently prevents collecting images across a wide range of qualities.

Likewise, we set out to achieve a high level of diversity regarding age, sex, and phototypes. In this regard, it is crucial to understand that some ICD categories and some clinical signs have a different incidence across populations. Unlike the issue of image qualities, there is an inherent impossibility to achieve an evenly distributed dataset across all ages, sexes and phototypes. For instance, pediatric issues affect children disproportionately. Due to this, it is inherently impossible to achieve an equal representation of pediatric issues across all ages. Likewise, issues in the vulva affect women disproportionately, since this anatomy is not evenly distributed across the population, which makes it impossible to achieve an equitable representation of issues in the vulva across all demographic groups. In some categories, the disproportion is less inherent, such as categories adjacent to acne that are not exclusive to a certain age range but do occur more often in certain demographics.

The prevalence of some skin conditions has been reported to be higher in specific populations. In other words, ICD categories do not manifest uniformly across demographics such as skin types, sex, or age. As dermatology atlases contain common examples of issues, these examples are likely to come from the demographic groups in which the issues are most frequent. Thus, the chances of capturing such conditions in other populations are much lower, requiring more active efforts to compensate for this issue.

References:

- Prevalence of most common skin diseases in Europe: a population-based study

- Prevalence of skin disease in a population-based sample of adults from five European countries

- Skin Diseases in Family Medicine: Prevalence and Health Care Use

- Dermatological diseases in the geriatric age group: Retrospective analysis of 7092 patients

- Distribution of childhood skin diseases according to age and gender, a single institution experience

Likewise, in the context of phototypes, certain clinical signs are inherently less visible in higher phototypes due to the presence of the dark pigment melanin. Pigments are substances that absorb certain wavelengths of light and reflect others. Melanin, a predominant pigment in the human epidermis, is crucial in this context.

Melanin, particularly eumelanin, is responsible for darker phototypes. It has a high capacity to absorb a wide range of light wavelengths. This absorption significantly impacts the perception of colors applied on such surfaces.

This is especially true for clinical signs that rely on chromatic difference, such as redness. Chromatic difference refers to the contrast between the color of an object and its background. This contrast is crucial in color perception. Because the reflectance properties of the epidermal surface of people with high phototypes provide a much lower chromatic difference, it is inherently impossible to use pigment-rich surfaces to train a neural network tasked with detecting chromatic clinical signs like redness.

Color perception in human vision is inherently tied to the physics of light: when light hits an object, some of it is absorbed while the rest is reflected. The wavelengths of the reflected light determine the color we perceive. This interaction is significantly influenced by the surface's reflectance properties. This is why surfaces with lower light reflectance reflect light with less chromatic variation. On the other hand, surfaces with high reflectance reflect most of the light. This is why lighter surfaces generate a greater chromatic difference.

In a similar way, the density of epidermal melanin also makes patients more resistant to UV radiation-induced molecular damage, which in turn affects the incidence of certain ICD categories and inherently makes them disproportionately prevalent in certain phototypes.

Indeed, melanin absorbs between 50% and 75% of UV radiation. The epidemiological data shows that there exists an inverse correlation between skin pigmentation and the incidence of sun-induced pigmentations. Subjects with low phototypes are approximately 70 times more likely to develop certain skin issues.

References:

For all these inherent reasons, it is impossible to achieve an even distribution of all phototypes across all classes and clinical signs.

To understand the diversity of sex, age and phototypes across our dataset, we conducted several analyses on subsets for which such metadata was available.

- In terms of sex, the representation of male and female subjects is balanced (51% and 48%).

- The most represented age group is 50-75 years, followed by 75+ years, 25-50 years, and 0-25 years (59%, 20%, 18%, 3%).

- The distribution of skin types in the dataset is relatively balanced, though there is a noticeable increase in the prevalence of Type 2, and a corresponding decrease in Type 6.

Once again, it is critical to acknowledge that the distribution of ICD-11 categories varies across these groups, which inherently contributes to appropriate disparities in dataset distribution. This, however, does not in itself indicate the presence of bias, as it is an unavoidable consequence of the prevalence of skin conditions.

Another consequence of building this dataset from many online sources is that each source followed a different taxonomy to name the ICD category observed in the images. This required a thorough revision of all the terms observed in the dataset to arrive at a curated list of usable ICD categories. This process is carried out in collaboration with expert medical specialists.

Firstly, we standardize all strings to ensure consistency in capitalization, aligning them with the naming conventions outlined in the ICD. Typically, this means capitalizing only the first letter of the category name, with exceptions for pathologies named after individuals. For instance, Generalised pustular psoriasis (EA90.40) follows this pattern, whereas variations like Generalised Pustular Psoriasis or generalised pustular psoriasis exhibit inconsistencies.

Next, we address typographical errors, although these are less common. Examples might include Generalised pustular soriasis or Generalised pustule psoriasis which we correct to the proper spelling.

Subsequently, we identify and handle acronyms such as GPP frequently utilized in clinical practice and reflected in various data sources. This stage underscores the necessity of collaboration with healthcare professionals, particularly in the final phase of linking taxonomy to ICD codes, as the relationship between them isn't always straightforward.

For example, distinguishing between Hand psoriasis and Elbow psoriasis doesn't yield distinct entities within the ICD taxonomy. However, categories like Nail psoriasis (EA90.51) or Scalp psoriasis (EA90.50) are clearly delineated and have their own coding inside the ICD taxonomy. This underscores the importance of expert input to ensure accurate classification.

Upon completing these steps, we generate a curated list encompassing all relevant ICD categories, providing a comprehensive and standardized resource.
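
The normalisation steps described above can be illustrated with a minimal sketch. The lookup tables below are hypothetical and only contain the examples mentioned in the text; in practice they would be curated together with medical specialists, and eponyms would need a capitalization exception list that is not shown here.

```python
import re

# Hypothetical lookup tables, populated only with the examples from the text.
TYPO_FIXES = {
    "generalised pustular soriasis": "generalised pustular psoriasis",
    "generalised pustule psoriasis": "generalised pustular psoriasis",
}
ACRONYMS = {"gpp": "generalised pustular psoriasis"}
ICD_CODES = {
    "generalised pustular psoriasis": "EA90.40",
    "nail psoriasis": "EA90.51",
    "scalp psoriasis": "EA90.50",
}


def normalise_label(raw: str) -> str:
    """Return a label with consistent capitalization, known typos fixed and acronyms expanded."""
    key = re.sub(r"\s+", " ", raw).strip().lower()
    key = ACRONYMS.get(key, key)
    key = TYPO_FIXES.get(key, key)
    # Capitalize only the first letter (eponym exceptions are not handled here).
    return key[:1].upper() + key[1:]


def to_icd_code(raw: str):
    """Map a raw source label to an ICD code, or None when expert review is needed."""
    return ICD_CODES.get(normalise_label(raw).lower())
```

In this sketch, a label such as Hand psoriasis returns no code, which mirrors the cases where expert input is required to decide how the term links to the ICD taxonomy.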

Annotation

The basic piece of information required to train our image recognition model is the ICD category assigned to every image. However, in order to gain better understanding of the data, the dataset is under an annotation process to obtain the following labels:

- Location of the affected skin structure: By drawing one or more bounding boxes, the annotator indicates where exactly the affected skin structure can be seen in the image.

- Image domain: The annotator must indicate whether the image is clinical, dermoscopic, histology, skin (but not dermatology), or out of the task's domain.

- Visible body parts: The annotator must select all the body parts that can be seen in the image. The options are: top of the head, scalp, back of the head, face, mouth, tongue, ear, eye, nose, neck, back, trunk-chest-abdomen, arm, armpit, hand, hand nail, buttock, genitals (groin, penis, vulva, anus), leg, knee, foot, foot nail, and close-up image (no body part is visible).

Due to the size of the dataset, the annotation process is still ongoing. Thanks to periodic revisions of the annotation, it is possible to add the location of the affected skin structure to model training, whereas the other labels (image domain and visible body parts) will become valuable for error analysis once the annotation is completed.

One of the most common data augmentation techniques in computer vision is random cropping, which consists of feeding a model with an N×M window randomly extracted from the input image. This reduces overfitting, as the model is always presented with slightly different views of every input image. In our scenario, however, using random cropping may result in unusable views that do not really contain the object of interest (i.e. the affected skin structure).

By using the annotated affected skin structure bounding boxes, we direct the random cropping procedure and ensure the random crops are always drawn from areas of the image that contain the relevant information.
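
As an illustration of this idea, the following sketch picks a random crop window that is guaranteed to contain the centre of an annotated bounding box. The function name, the pixel-coordinate box layout and the "centre must be inside the crop" criterion are assumptions made for the example; the actual augmentation pipeline may apply a different rule.

```python
import random


def guided_crop_window(img_w, img_h, crop_w, crop_h, box, rng=random):
    """Pick a crop window (left, top, right, bottom) that overlaps `box`.

    `box` is (x_min, y_min, x_max, y_max) in pixels. The window is placed at
    random, but always so that the centre of the box falls inside the crop.
    """
    crop_w, crop_h = min(crop_w, img_w), min(crop_h, img_h)
    cx = min(int((box[0] + box[2]) / 2), img_w - 1)
    cy = min(int((box[1] + box[3]) / 2), img_h - 1)
    # Valid left/top positions keep the box centre inside the crop
    # and the crop inside the image.
    left = rng.randint(max(0, cx - crop_w + 1), min(img_w - crop_w, cx))
    top = rng.randint(max(0, cy - crop_h + 1), min(img_h - crop_h, cy))
    return left, top, left + crop_w, top + crop_h
```

Each call returns a different window for the same image, so the model still sees varied views, but none of them can miss the annotated area entirely.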

Partitioning

One crucial step of the development is splitting the dataset into three groups:

- Training sets

- Validation sets

- Test sets

When an incoming image dataset includes any sort of metadata that makes it possible to group images by subject, the data is split at subject level. This strategy improves the reliability of the validation and test metrics, and it is a best practice in the field.
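
A minimal sketch of a subject-level split is shown below. It assumes each image comes with a subject identifier and uses a hash of that identifier so that all images of one subject land in the same partition. The 10% validation and 10% test fractions are illustrative assumptions, not the proportions used for the device.

```python
import hashlib


def assign_split(subject_id: str, val_frac=0.1, test_frac=0.1) -> str:
    """Deterministically assign a subject (and all of its images) to one split."""
    digest = hashlib.sha256(subject_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "validation"
    return "train"


def split_by_subject(records):
    """`records` is an iterable of (image_path, subject_id) pairs."""
    splits = {"train": [], "validation": [], "test": []}
    for image_path, subject_id in records:
        splits[assign_split(subject_id)].append(image_path)
    return splits
```

Because the assignment depends only on the subject identifier, images of the same subject can never leak between the training, validation and test sets.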

Thanks to such a large collection of datasets, it is also possible to perform reliable validations by reserving some of these datasets entirely and exclusively for testing, which helps explore and analyze the performance of the model in completely uncontrolled scenarios. The performance of the model on these test-only sources is commonly used to assess whether a new iteration of the image recognition model has improved its performance or not.

To ensure the model has enough images per category, it is only trained on those with a sufficient number of images. We set the category sample size threshold at 25 images, which reduced the list of close to 1000 ICD-11 categories to 239.
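
The filtering step can be sketched as follows. The threshold value comes from the text; treating it as "at least 25 images" (rather than "more than 25") is an assumption of this example.

```python
from collections import Counter

MIN_IMAGES_PER_CATEGORY = 25  # threshold stated in the text


def trainable_categories(labels, min_images=MIN_IMAGES_PER_CATEGORY):
    """Return the set of categories that have at least `min_images` images."""
    counts = Counter(labels)
    return {category for category, n in counts.items() if n >= min_images}
```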

Pre-processing

The images do not undergo any pre-processing step prior to training. During the training stage, the images go through a variety of data augmentation pipelines to increase variance and reduce overfitting. However, in the validation and test stages the images are not modified at all, only resized to the model's input size.

Model calibration

Image recognition models are known for becoming overly confident after training, which, applied to this scenario, can harm the overall ICD classification performance. If the device predicts the wrong probabilities and sets an extremely high probability for an incorrect class, the answer may lead the user to believe the model is confident about the answer.

To overcome this problem, a final step has been added to the training process, which consists of slightly fitting the model to the validation set by applying temperature scaling. With this additional postprocessing, the device is encouraged to generate 'softer' or less extreme probability distributions.

The main benefit of model calibration is that it increases the interpretability of the results, as models not only need to be accurate (i.e. the correct class should be within the top 3 or 5 categories with the highest probability in the distribution) but also indicate how likely it is that the output is not correct. When the model is fed with an image and the output is a very soft distribution, it means that the model is not confident enough about any particular ICD category.

A more detailed explanation can be found in the publication "On calibration of modern neural networks" (Guo et al., 2017).
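
To make the idea concrete, the sketch below fits a single temperature on validation logits by minimising the negative log-likelihood, as in Guo et al. (2017). It is a self-contained illustration in plain Python: the golden-section search, the search bounds and all function names are choices made for this example and do not describe the device's actual training code.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_total for z in scaled]


def softmax(logits, temperature=1.0):
    return [math.exp(v) for v in log_softmax(logits, temperature)]


def nll(logits_batch, targets, temperature):
    """Mean negative log-likelihood of the true classes at a given temperature."""
    losses = [-log_softmax(l, temperature)[t] for l, t in zip(logits_batch, targets)]
    return sum(losses) / len(losses)


def fit_temperature(logits_batch, targets, lo=0.05, hi=20.0, iters=60):
    """Golden-section search (in log space) for the temperature minimising NLL."""
    ratio = (math.sqrt(5) - 1) / 2
    a, b = math.log(lo), math.log(hi)
    for _ in range(iters):
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if nll(logits_batch, targets, math.exp(c)) < nll(logits_batch, targets, math.exp(d)):
            b = d
        else:
            a = c
    return math.exp((a + b) / 2)
```

A fitted temperature above 1 flattens the distribution without changing the ranking of the categories, so top-k accuracy is unaffected while the probabilities become less extreme.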

Test-time augmentation (TTA)

In addition to using a state-of-the-art architecture, we apply test-time augmentation (TTA) to obtain a more stable output. This technique consists of applying slight distortions to the input image (such as changing the contrast and brightness, rotating the image or equalizing the histogram of the image) and feeding the model with the input image and all its distorted views. This results in one probability distribution per view. Finally, all the probability distributions are averaged to obtain the final output.
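
The averaging step can be sketched as follows. Here `model` and `transforms` are hypothetical callables standing in for the trained network and the distortion functions; only the averaging logic is meant to reflect the description above.

```python
def predict_with_tta(image, model, transforms):
    """Average the model's probability distributions over the image and its views.

    `model` maps an image to a list of class probabilities; each item in
    `transforms` maps an image to a distorted view of it.
    """
    views = [image] + [transform(image) for transform in transforms]
    distributions = [model(view) for view in views]
    n_classes = len(distributions[0])
    return [
        sum(dist[k] for dist in distributions) / len(distributions)
        for k in range(n_classes)
    ]
```

Since every input distribution sums to one, their average also sums to one and can be reported directly as the final output.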

Quantification of intensity, count and extent of visible clinical signs

Another core feature of the device is to provide quantifiable data on the intensity, count and extent of clinical signs such as erythema, desquamation, and induration, among others.

To achieve that, the device uses a range of deep learning technologies, combined and developed for that specific use. Here's a list of the technologies used:

- Object detection: used to count clinical signs such as hives, papules or nodules.

- Semantic segmentation: used to determine the extent of clinical signs such as hair loss or erythema.

- Image recognition: used to quantify the intensity of visual clinical signs like erythema, excoriation, dryness, lichenification, oozing, and edema.

Data collection, model training and validation

The device comprises several object detection models that are trained for a specific task. For each model, a basic dataset is constructed by taking the images of the desired ICD category from the main image recognition dataset.

Furthermore, the augmentation of these datasets is possible by incorporating additional images, including those depicting healthy skin, with the primary objective of mitigating false positives. The meticulous annotation of these images, ensuring consistency in diagnosing healthy skin and specifying additional elements such as body site or domain, is carried out in the preceding stage of image-based recognition of visible ICD categories. A detailed explanation of this annotation process can be found in the dedicated section.

This approach yields various subsets comprising specific images tailored for distinct tasks, each undergoing a precise annotation process aligned with the requirements of the task at hand.

Annotation

We have collected a variety of labels depending on the task and visible sign:

- For object detection models, we annotated bounding boxes in the YOLO format. A bounding box is a set of coordinates that locate an object of interest inside a rectangle.

- For semantic segmentation, we annotated polygons that enclose the areas of an image that contain the clinical sign.

- For image recognition, we tagged the images as one or more categories depending on the clinical signs observed in the images and their severity or intensity.
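
For readers unfamiliar with the YOLO label format mentioned above, each line of a label file stores a class index followed by the box centre, width and height, all normalised to the image size. The helper below, whose name is chosen for this example, converts one such line to pixel coordinates.

```python
def yolo_to_pixels(line: str, img_w: int, img_h: int):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels.

    A YOLO line has the form "class x_center y_center width height", with the
    four box values expressed as fractions of the image width and height.
    """
    class_id, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2
```

For a counting task, the number of such lines for a given class in an image's label file is the annotated count of that clinical sign.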

For more information about our approach to quantification of intensity, count and extent of visible clinical signs, please read our papers:

- Automatic SCOring of Atopic Dermatitis Using Deep Learning: A Pilot Study (Medela et al., 2022). DOI: 10.1016/j.xjidi.2022.100107

- Automatic International Hidradenitis Suppurativa Severity Score System (AIHS4): A novel tool to assess the severity of hidradenitis suppurativa using artificial intelligence (Hernández Montilla et al., 2023). DOI: 10.1111/srt.13357

- Automatic Urticaria Activity Score (AUAS): Deep Learning-based Automatic Hive Counting for Urticaria Severity Assessment (Mac Carthy et al., 2023). DOI: 10.1016/j.xjidi.2023.100218

Image quality assessment

All the aforementioned deep learning models are expected to be used with images that contain actual skin structure captured with at least some level of visual quality. In other words, an input image must meet a minimum standard in terms of photography-related parameters such as exposure, focus, contrast or resolution. If these standards are not met when the user submits an image, there is a risk that the models produce suboptimal predictions.

In order to ensure the optimal visual quality and clinical utility of the images, we use a different deep learning technology, which we call DIQA (Dermatology Image Quality Assessment).

DIQA is used to assess the perceived visual quality of every single image before it is processed by the device. By using this feature, it is possible to spot images that are unsuitable for the intended use, and therefore help ensure that only suitable images are utilized. The integration of DIQA with other deep learning models is straightforward. DIQA operates as an initial step, preceding the activation of any subsequent deep learning models. Importantly, the output generated by DIQA does not influence or interact with these subsequent models in any manner. The models that follow are executed based on the user's specific request.

For more information, read DIQA's research letter:

Dermatology Image Quality Assessment (DIQA): Artificial intelligence to ensure the clinical utility of images for remote consultations and clinical trials (Hernández-Montilla et al., 2023). DOI: 10.1016/j.jaad.2022.11.002.

To train this model, a dataset of skin images was assessed by a large crowd of human observers, following the ITU-T P.910 Recommendations. The observers were tasked with reviewing every image and assigning a quality score that ranged from 0 (the worst quality) to 10 (the best quality). The model, based on the EfficientNet architecture, was then trained using the average score of every image as its label.

The strength of EfficientNet lies in its balanced scaling of depth, width, and resolution, optimizing model efficiency. This compound scaling approach ensures superior performance with fewer parameters, enabling effective resource utilization for diverse computer vision tasks.

For more information, read the original EfficientNet publication, "EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks" (Tan and Le, 2019).

Data collection, model training and validation

The image quality assessment (IQA) model was trained, validated and tested using a combination of several third-party IQA datasets. Additionally, we collected 1,944 dermatology images and created a custom IQA dataset focused on the dermatology domain.

The third-party IQA datasets consist of collections of images rated by large groups of observers. As the goal of IQA is to detect both good and bad-quality images, these datasets comprise images with high variance in terms of visual quality. The images were purposely taken under many acquisition settings (imaging device, lighting, focus, contrast...), which makes IQA models more robust.

Annotation

In each dataset, each observer rated every image by assigning a perceived visual quality score (e.g. on a scale from 1 to 10, where 10 is excellent visual quality). This results in every image having N scores, which are then averaged to obtain the Mean Opinion Score (MOS).

Similarly, we collected a set of dermatology images and asked a board of 40 observers to rate every image, and then obtained their corresponding MOS. By adding this dermatology-only dataset to training, we ensure that DIQA is capable of transferring the knowledge obtained from the other IQA datasets to the dermatology domain.
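
The MOS computation described above amounts to a per-image average of the observers' scores. A minimal sketch, assuming the ratings are available as a mapping from an image identifier to its list of scores (a data layout chosen for this example):

```python
from statistics import mean


def mean_opinion_scores(ratings):
    """Return image id -> MOS from a mapping of image id -> list of observer scores.

    Images without any score are skipped, since their MOS is undefined.
    """
    return {image_id: mean(scores) for image_id, scores in ratings.items() if scores}
```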

Image domain check

In some cases, the user may accidentally upload an image that, no matter the visual quality, does not represent skin structure. Examples of such out-of-domain images are photographs of other organs, out-of-scope body structures, printed reports, screenshots of medical records, a picture of a surgical tool, or more extreme examples such as a picture of a dog or a truck.

To prevent the misuse of the device, it incorporates a deep learning model for image domain check. It consists of a lightweight image recognition model (a tiny ViT) trained to classify an input image as one of these possible types:

- Clinical

- Dermoscopic

- Out-of-domain

Thanks to the low complexity of this task (i.e. it is easy to distinguish between a dermatology image and a natural image), it is possible to train a model that is not computationally expensive (1.1 GMACs), yet with a high performance (89% top-1 accuracy on the ImageNet dataset), which adds an extra level of input quality assurance at a negligible cost in terms of overall processing time.

Thanks to the image domain check feature, any image that is not suitable for the expected use of the device (i.e. the out-of-domain category) will be detected and excluded from further processing.
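
The gating behaviour can be sketched as follows. The three labels come from the list above; selecting the label with the highest probability is an assumption of this example, as the text does not state the exact decision rule.

```python
DOMAIN_LABELS = ("clinical", "dermoscopic", "out-of-domain")


def check_domain(probabilities):
    """Return (label, accepted) for a probability vector ordered as DOMAIN_LABELS.

    Assumes the most probable label decides; out-of-domain images are rejected.
    """
    best = max(range(len(DOMAIN_LABELS)), key=lambda k: probabilities[k])
    label = DOMAIN_LABELS[best]
    return label, label != "out-of-domain"
```

An integration would only forward the image to the other models when the second element of the result is true.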

Data collection, model training and validation

To train, validate and test this model, we created an image dataset by combining our image disease recognition dataset with others that are not related to dermatology, such as ImageNet (with the exclusion of the person category), MS-COCO 2017 (with the exclusion of person instances), Google's Cartoon Set, and the Textures dataset. The images could be easily labelled as clinical, dermoscopic, or out-of-domain (i.e. non-dermatology).

Technical features

Features

REST API

Our device is built as an API that follows the REST architectural style.

This style fully separates the user interface from the server and the data storage. Thanks to this, a REST API is independent of the language or platform that the user may use, which gives considerable freedom and autonomy to the user. With a REST API, the user can use PHP, Java, Python or Node.js servers, among others. The only requirement is that requests and responses always use the format chosen for the information exchange: JSON.

OpenAPI Specification

Our medical device includes an OpenAPI Specification.

OpenAPI Specification (formerly known as Swagger Specification) is an API description format for REST APIs. An OpenAPI file allows you to describe an entire API, including:

- Available endpoints and operations on each endpoint (GET, POST)

- Operation parameters: input and output for each operation

- Authentication methods

- Contact information, license, terms of use and other information.

This means that our API itself has embedded specifications that help the user understand the type of values that are transmitted by the API.

HL7 FHIR®

FHIR is a standard for health care data exchange, published by HL7®. FHIR is suitable for use in a wide variety of contexts: mobile phone apps, cloud communications, EHR-based data sharing, server communication in large institutional healthcare providers, and much more.

FHIR solves many challenges of data interoperability by defining a simple framework for sharing data between systems.

Accessories

Primary accessories

Primary accessories are the components that interact directly with the device. These can be known by the manufacturer. They are also required to interact with the device.

The device is used through an API (Application Programming Interface). This means that the interface is coded, and used programmatically, without a user interface.

In other words: the device is used server-to-server, by computer programs. Thus, no accessory is used directly in interaction with the device.

Secondary accessories

Secondary accessories are the components that may interact indirectly with the device. These are developed and maintained independently by the user, and the manufacturer has no visibility as to their identity or operating principles. They are also optional and not required to interact with the device.

The device may also be used indirectly through applications, such as the care provider's Electronic Health Records (EHR). The EHR is the software system that stores patients' data: medical and family history, laboratory and other test results, prescribed medications history, and more. This is developed and maintained independently of us, and may be used to indirectly interact with the device.

The patients and healthcare providers may use image capture devices to take photos of skin structures. In this regard, the minimum requirement is a 12 MP camera.

Performance attributes of the device

The relevant performance attributes are:

| Metric | Value |

|---|---|

| Weight | 33 kilobytes |

| Average response time | 1400 milliseconds |

| Maximum requests per second | no limit |

| Service availability time slot | The service is available at all times |

| Service availability rate during its working slot (in % per month) | 100% |

| Maximum application recovery time in the event of a failure (RTO/AIMD) | 6 hours |

| Maximum data loss in the event of a fault (none, current transaction, day, week, etc.) (RPO/PDMA) | None |

| Maximum response time to a transaction | 10 seconds |

| Backup device (software, hardware) | Software (AWS S3) |

| Backup frequency | 12 hours |

| Backup modality | Incremental |

| Recommended dimensions of images sent | 10,000 px² |

Using the device

The device is architected to seamlessly integrate with other software platforms. Primarily designed as an Application Programming Interface (API), it allows healthcare organizations to establish a real-time connection between their native systems, such as Electronic Medical Records (EMR) systems, and the device. This ensures that images can be sent from the EMR and clinical data from the device can be received and stored back into the EMR in real time.

Our instructions for use can be found at Legit.Health Plus_IFU, and contain detailed and helpful information to help our client integrate the device into their systems.

Integration methods

To use the device our clients will need to involve an IT team. Their IT team will create an integration between whichever system the client uses, and our device. This integration will allow non-technical users, such as HCPs or their patients, to indirectly interact with our device. In other words: the client will develop a user-friendly interface, and connect that to our device via API programmatically.

As such, it is up to the client and their IT team to decide how they develop the interface, and what method of integration they use to communicate with our device. And that is why it is so important that we assist the client, to guide them in what method of integration may best match their needs.

To simplify the issue, we have divided the documentation into three logical groups. These three groups represent some of the most common integrations that clients have used in the past. The methods are:

- JSON-only

- Deep link

- Iframe

These 3 integration methods are simply a collection of technical combinations and resources that clients have used. However, they can be combined, or mixed, depending on the needs of the customer. In other words: these three groups are not actually different methods, but rather a combination of programming techniques that, in combination, turn out to match the needs of most of our customers.

JSON-only

We call JSON-only the situation in which a customer decides to develop an integration that communicates with the device in the most basic and unadorned way.

In short, this usually consists of the server of the customer sending a very simple POST request with an image, and getting back a JSON file with the raw data output by the device.
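
As a rough illustration of such a request, the sketch below builds a POST request that carries one image as base64-encoded JSON. The endpoint URL, the `image` field name and the bearer-token header are hypothetical placeholders for this example; the real endpoints, fields and authentication are defined in the instructions for use and the OpenAPI Specification.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint, for illustration only.
API_URL = "https://api.example.com/v1/analyse"


def build_request(image_bytes: bytes, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one image as base64 JSON."""
    # The "image" field name and the Authorization scheme are assumptions.
    payload = {"image": base64.b64encode(image_bytes).decode("ascii")}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

The customer's server would send this request with an HTTP client of its choice and parse the JSON body of the response to obtain the raw output of the device.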

There are pros and cons to this method, and we only recommend it if the customer is very tech-savvy and very used to developing integrations and user interfaces. As explained below, the customer is welcome to make use of our pre-designed interfaces and SDKs, but they usually don't rely much on them because they have very specific branding or interface needs, and they have extensive technical expertise.

Deep link

We call Deep link the situation wherein customers decide to develop an integration that relies heavily on the pre-designed interfaces and SDKs that we put at their disposal.

In short, as well as the image of the affected skin structure, the customer's system also sends data about the users to whom the image belongs. By doing so, the device can output data in a way that two images of the same patient can be related. This means that the device can provide data in a time series, that reflects the evolution of patients. Likewise, the data of the patient is linked to the HCP who uploaded it. All of this helps the customer keep data organised in their own system because it makes the output more verbose and therefore more explanatory.

It is also common, in this situation, that customers rely on user interfaces that we put at their disposal. The purpose of this pre-designed interface is simply to help customers show the data output by the device in a way that is more useful and understandable.

Iframe

We call Iframe the situation wherein customers decide to develop an integration that sends a minimal amount of data, and shows the output based on a very small pre-designed interface. This is a very minimal integration, that is useful when customers only use our device for a small interaction inside their whole clinical workflow.

In short, this situation occurs when customers embed into their system an iframe with the report output by the device. It is advantageous in cases where customers want to make use of some pre-designed interfaces, but don't want to send data to the device, except for the image.

Pre-existing interfaces

To help customers in the process of displaying the data outputted by the device, we put at their disposal some interfaces. The purpose of these interfaces is to shorten the development times and reduce the development efforts of customers.

Both the Iframe and the Deep link situations rely upon the use of these pre-existing interfaces, that customers can, of course, modify and customise.

Record signature meaning

- Author: JD-017 Alejandro Carmena

- Review: JD-003 Taig Mac Carthy

- Approve: JD-005 Alfonso Medela