R-TF-028-005 AI Development Report

Table of contents

- Introduction

- Data Management

- Model Development and Validation

- ICD Category Distribution and Binary Indicators

- Erythema Intensity Quantification

- Desquamation Intensity Quantification

- Induration Intensity Quantification

- Pustule Intensity Quantification

- Crusting Intensity Quantification

- Xerosis Intensity Quantification

- Swelling Intensity Quantification

- Oozing Intensity Quantification

- Excoriation Intensity Quantification

- Lichenification Intensity Quantification

- Wound Characteristic Assessment

- Inflammatory Nodular Lesion Quantification

- Acneiform Lesion Type Quantification

- Hair Follicle Quantification

- Acneiform Inflammatory Lesion Quantification

- Hive Lesion Quantification

- Body Surface Segmentation

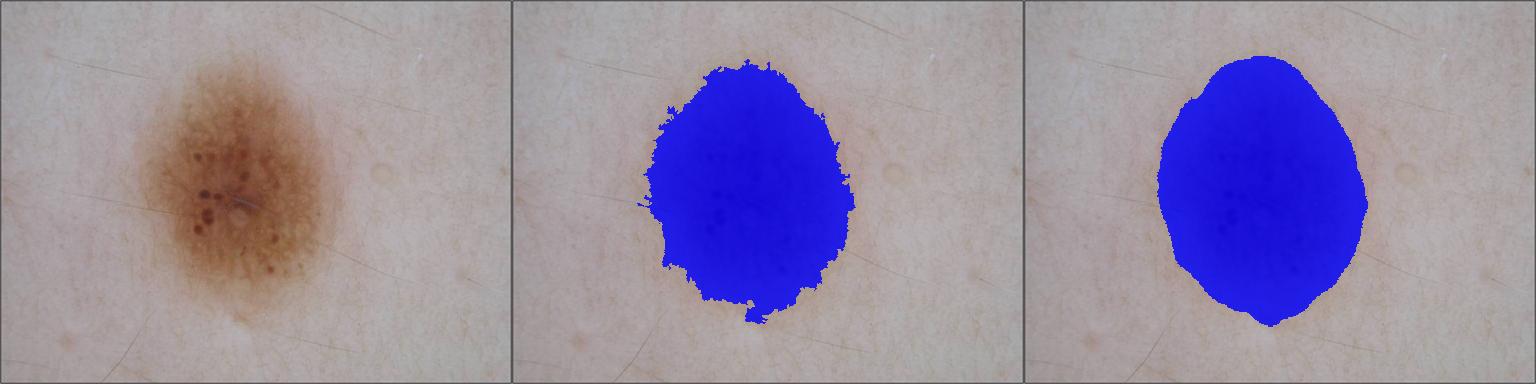

- Wound Surface Quantification

- Erythema Surface Quantification

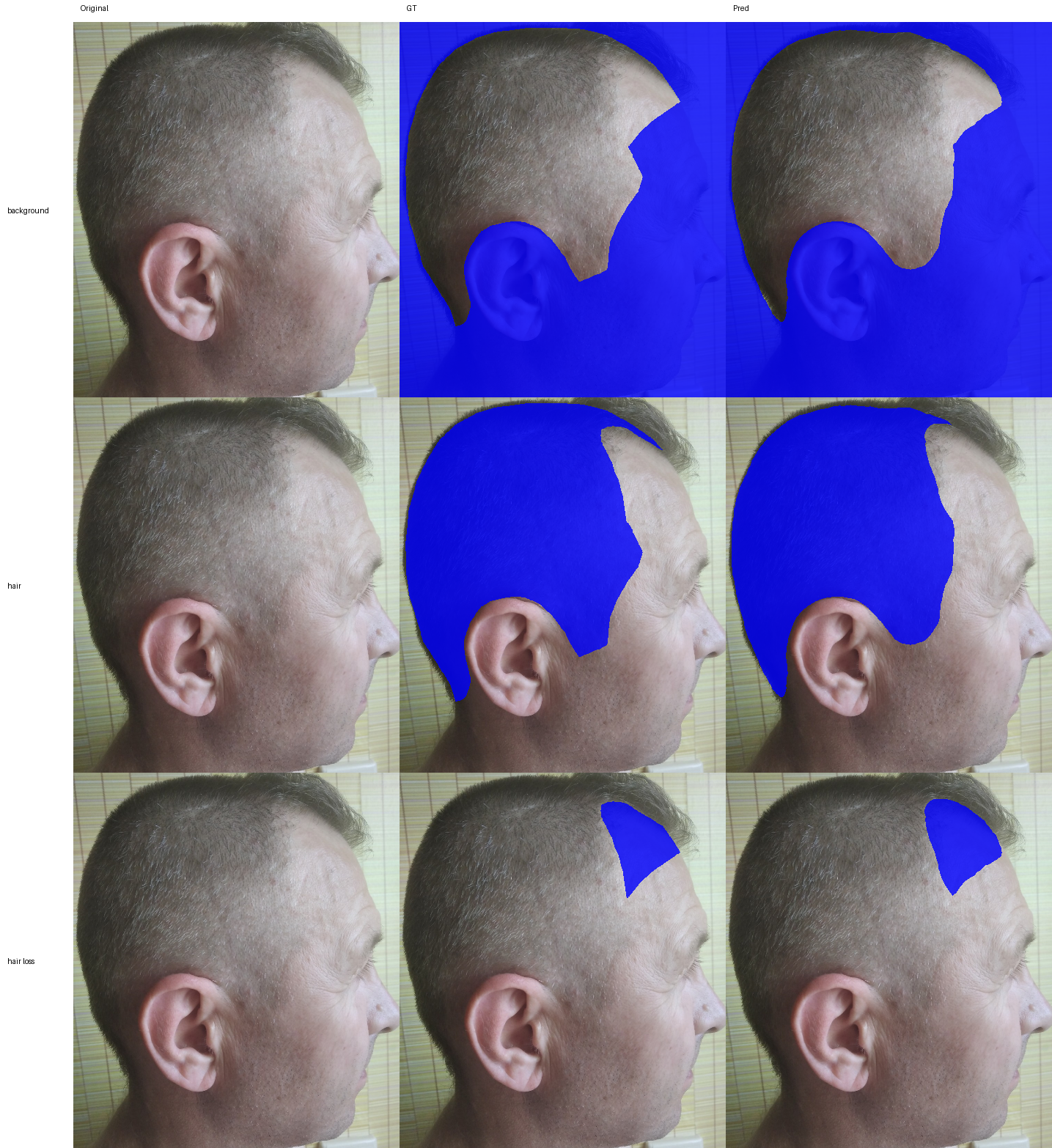

- Hair Loss Surface Quantification

- Nail Lesion Surface Quantification

- Hypopigmentation or Depigmentation Surface Quantification

- Hyperpigmentation Surface Quantification

- Skin Surface Segmentation

- Follicular and Inflammatory Pattern Identification

- Inflammatory Nodular Lesion Pattern Identification

- Dermatology Image Quality Assessment (DIQA)

- Domain Validation

- Head Detection

- Summary and Conclusion

- State of the Art Compliance and Development Lifecycle

- Integration Verification Package

- Purpose

- Package Location and Structure

- Package Contents Per Model

- Acceptance Criteria

- Model-Specific Package Details

- Clinical Models - ICD Classification and Binary Indicators

- Clinical Models - Visual Sign Intensity Quantification

- Clinical Models - Wound Characteristic Assessment

- Clinical Models - Lesion Quantification

- Clinical Models - Surface Area Quantification

- Clinical Models - Pattern Identification

- Non-Clinical Models

- Verification Procedure for Software Integration Team

- Traceability

- AI Risks Assessment Report

- Related Documents

Introduction

Context

This report documents the development, verification, and validation of the AI algorithm package for the Legit.Health Plus medical device. The development process was conducted in accordance with the procedures outlined in GP-028 AI Development and followed the methodologies specified in the R-TF-028-002 AI Development Plan.

The algorithms are designed as offline (static) models. They were trained on a fixed dataset prior to release and do not adapt or learn from new data after deployment. This ensures predictable and consistent performance in the clinical environment.

Algorithms Description

The Legit.Health Plus device incorporates 59 AI models that work together to fulfill the device's intended purpose. A comprehensive description of all models, their clinical objectives, and performance specifications is provided in R-TF-028-001 AI/ML Description.

The AI algorithm package includes:

Clinical Models (directly fulfilling the intended purpose - 54 models):

- ICD Category Distribution and Binary Indicators (1 model): Provides interpretative distribution of ICD-11 categories.

- Visual Sign Intensity Quantification Models (10 models): Quantify the intensity of clinical signs including erythema, desquamation, induration, pustule, crusting, xerosis, swelling, oozing, excoriation, and lichenification.

- Wound Characteristic Assessment (24 models): Evaluates wound tissue types, characteristics, exudate types, and perilesional conditions.

- Lesion Quantification Models (5 models):

- Inflammatory Nodular Lesion Quantification

- Acneiform Lesion Type Quantification

- Inflammatory Lesion Quantification

- Hive Lesion Quantification

- Hair Follicle Quantification

- Surface Area Quantification Models (12 models):

- Erythema Surface Quantification

- Wound Bed Surface Quantification

- Angiogenesis and Granulation Tissue Surface Quantification

- Biofilm and Slough Surface Quantification

- Necrosis Surface Quantification

- Maceration Surface Quantification

- Orthopedic Material Surface Quantification

- Bone, Cartilage, or Tendon Surface Quantification

- Hair Loss Surface Quantification

- Nail Lesion Surface Quantification

- Hypopigmentation or Depigmentation Surface Quantification

- Hyperpigmentation Surface Quantification

- Pattern Identification Models (2 models):

- Follicular and Inflammatory Pattern Identification

- Inflammatory Pattern Identification

Non-Clinical Models (supporting proper functioning - 5 models):

- Domain Validation: Verifies images are within the validated, dermatology domain.

- Dermatology Image Quality Assessment (DIQA): Ensures image quality is suitable for analysis.

- Skin Surface Segmentation: Identifies skin regions for analysis.

- Body Surface Segmentation: Segments body surface for BSA calculations.

- Head Detection: Localizes heads for privacy and counting workflows.

Total: 54 Clinical Models + 5 Non-Clinical Models = 59 Models

This report focuses on the development methodology, data management processes, and validation results for all models. Each model shares a common data foundation but may require specific annotation procedures as detailed in the respective data annotation instructions.

AI Standalone Evaluation Objectives

The standalone validation aimed to confirm that all AI models meet their predefined performance criteria as outlined in R-TF-028-001 AI/ML Description.

Performance specifications and success criteria vary by model type and are detailed in the individual model sections of this report. All models were evaluated on independent, held-out test sets that were not used during training or model selection.

Data Management

Overview

The development of all AI models in the Legit.Health Plus device relies on a comprehensive dataset compiled from multiple sources and annotated through a multi-stage process. This section describes the general data management workflow that applies to all models, including collection, foundational annotation (ICD-11 mapping), and partitioning. Model-specific annotation procedures are detailed in the individual model sections.

Data Collection

The dataset was compiled from multiple distinct sources:

- Archive Data: Images sourced from reputable online sources and private institutions, as detailed in

R-TF-028-003 Data Collection Instructions - Archive Data. - Custom Gathered Data: Images collected under formal protocols at clinical sites, as detailed in

R-TF-028-003 Data Collection Instructions - Custom Gathered Data.

This combined approach resulted in a comprehensive dataset covering diverse demographic characteristics (age, sex, Fitzpatrick skin types I-VI), anatomical sites, imaging conditions, and pathological conditions.

Dataset summary:

| Item | Value |

|---|---|

| Total ICD-11 categories | 850 |

| Total images | 280342 |

| Images of FST-1 | 89225 (31.83%) |

| Images of FST-2 | 91349 (32.58%) |

| Images of FST-3 | 59610 (21.26%) |

| Images of FST-4 | 23466 (8.37%) |

| Images of FST-5 | 11914 (4.25%) |

| Images of FST-6 | 4778 (1.70%) |

| Images of female | 52857 (18.85%) |

| Images of male | 55334 (19.74%) |

| Images of unspecified sex | 172151 (61.41%) |

| Images of Pediatric | 12829 (4.58%) |

| Images of Adult | 52694 (18.80%) |

| Images of Geriatric | 28350 (10.11%) |

| Images of unspecified age | 186469 (66.51%) |

| ID | Dataset Name | Type | Description | ICD-11 Mapping | Crops | Diff. Dx | Sex | Age |

|---|---|---|---|---|---|---|---|---|

| 1 | Torrejon-HCP-diverse-conditions | Multiple | Dataset of skin images by physicians with good photographic skills | ✓ Yes | Varies | ✓ | ✓ | ✓ |

| 2 | Abdominal-skin | Archive | Small dataset of abdominal pictures with segmentation masks for `Non-specific lesion` class | ✗ No | Yes (programmatic) | — | — | — |

| 3 | Basurto-Cruces-Melanoma | Custom gathered | Clinical validation study dataset (`MC EVCDAO 2019`) | ✓ Yes | Yes (in-house crops) | — | ✓ | ✓ |

| 4 | BI-GPP (batch 1) | Archive | Small set of GPP images from Boehringer Ingelheim (first batch) | ✓ Yes | No | — | — | — |

| 5 | BI-GPP (batch 2) | Archive | Large dataset of GPP images from Boehringer Ingelheim (second batch) | ✓ Yes | Yes (programmatic) | — | ✓ | ✓ |

| 6 | Chiesa-dataset | Archive | Sample of head and neck lesions (Medela et al., 2024) | ✓ Yes | Yes (in-house crops) | — | ◐ | ◐ |

| 7 | Figaro 1K | Archive | Hair style classification and segmentation dataset, repurposed for `Non-specific finding` | ✗ No | Yes (in-house crops) | — | — | — |

| 8 | Hand Gesture Recognition (HGR) | Archive | Small dataset of hands repurposed for non-specific images | ✗ No | Yes (programmatic) | — | — | — |

| 9 | IDEI 2024 (pigmented) | Archive | Prospective and retrospective studies at IDEI (DERMATIA project), pigmented lesions only | ✓ Yes | Yes (programmatic) | — | ✓ | ◐ |

| 10 | Manises-HS | Archive | Large collection of hidradenitis suppurativa images | ✗ No | Not yet | — | ✓ | ✓ |

| 11 | Nails segmentation | Archive | Small nail segmentation dataset repurposed for `non-specific lesion` | ✗ No | Yes (programmatic) | — | — | — |

| 12 | Non-specific lesion V2 | Archive | Small representative collection repurposed for `non-specific lesion` | ✗ No | Yes (programmatic) | — | — | — |

| 13 | Osakidetza-derivation | Archive | Clinical validation study dataset (`DAO Derivación O 2022`) | ✓ Yes | Yes (in-house crops) | ◐ | ✓ | ✓ |

| 14 | Ribera ulcers | Archive | Collection of ulcer images from Ribera Salud | ✗ No | Yes (from wound masks, not all) | — | — | — |

| 15 | Transient Biometrics Nails V1 | Archive | Biometric dataset of nail images | ✗ No | Yes (programmatic) | — | — | — |

| 16 | Transient Biometrics Nails V2 | Archive | Biometric dataset of nail images | ✗ No | No (close-ups) | — | — | — |

| 17 | WoundsDB | Archive | Small chronic wounds database | ✓ Yes | No | — | ✓ | ◐ |

| 18 | Clinica Dermatologica Internacional - Acne | Custom gathered | Compilation of images from CDI's acne patients with IGA labels | ✓ Yes | No | — | — | — |

| 19 | Manises-DX | Archive | Large collection of images of different skin conditions | ✓ Yes | Not yet | — | — | — |

Total datasets: 55 | With ICD-11 mapping: 41

Legend: ✓ = Yes | ◐ = Partial/Pending | — = No

Foundational Annotation: ICD-11 Mapping

Before any model-specific training could begin, all clinical labels across all data sources were standardized to the ICD-11 classification system. This foundational annotation step is required for all models and is detailed in R-TF-028-004 Data Annotation Instructions - ICD-11 Mapping.

The ICD-11 mapping process involved:

- Label Extraction: Extracting all unique clinical labels from each data source

- Standardization: Mapping source-specific labels (abbreviations, alternative spellings, legacy coding systems) to standardized ICD-11 categories

- Clinical Validation: Expert dermatologist review and validation of all mappings

- Visible Category Consolidation: Grouping ICD-11 codes that cannot be reliably distinguished based on visual features alone into unified "Visible ICD-11" categories. To handle images with no visible skin conditions (i.e. "clear" skin), a new

Non-specific findingcategory was created, being the only category that does not have an associated ICD-11 code.

This standardization ensures:

- Consistent reference standard across all data sources.

- Clinical validity and regulatory compliance (ICD-11 is the WHO standard).

- Proper handling of visually similar conditions that require additional clinical information for differentiation.

- A unified clinical vocabulary for the ICD Category Distribution model and all other clinical models.

Model Development and Validation

This section details the development, training, and validation of all AI models in the Legit.Health Plus device. Each model subsection includes:

- Model-specific data annotation requirements

- Training methodology and architecture

- Performance evaluation results

- Bias analysis and fairness considerations

ICD Category Distribution and Binary Indicators

Model Overview

Reference: R-TF-028-001 AI/ML Description - ICD Category Distribution and Binary Indicators section

The ICD Category Distribution model is a deep learning classifier that outputs a probability distribution across ICD-11 disease categories. The Binary Indicators are derived from this distribution using an expert-curated mapping matrix.

Models included:

- ICD Category Distribution (outputs top-5 conditions with probabilities)

- Binary Indicators (6 derived indicators):

- Malignant

- Pre-malignant

- Associated with malignancy

- Pigmented lesion

- Urgent referral (≤48h)

- High-priority referral (≤2 weeks)

Data Requirements and Annotation

Foundational annotation: ICD-11 mapping (as described in R-TF-028-004 Data Annotation Instructions - ICD-11 Mapping)

Binary Indicator Mapping: A dermatologist-validated mapping matrix was created to link each ICD-11 category to the six binary indicators. This mapping defines which disease categories contribute to each indicator (e.g., melanoma, squamous cell carcinoma, and basal cell carcinoma all contribute to the "Malignant" indicator). A complete explanation of Binary Indicator Mapping can be found in R-TF-028-004 Data Annotation Instructions - Binary Indicator Mapping.

The result of the foundational annotation and binary indicator mapping is LegitHealth-DX, which presents high variability in terms of category frequency (i.e. some categories have more images than others). For each category, we split their corresponding images into a training, a validation, and a test set.

In addition to the ICD-11 and binary indicator mapping, we conducted an extra annotation step by identifying the skin condition in the image. This was done by drawing one or more drawing boxes to enclose the visible skin condition in the image. This extra step was motivated by the use of random cropping during data augmentation. Although it is a commonly used trick to increase training diversity, in this scenario there would be a high risk of cropping areas of the image that do not correspond to the actual skin condition, leading to unreliable model learning. By using these manually annotated boxes, we ensure that random areas are picked from one or more of these annotated boxes.

Finally, to ensure a reliable performance for the ICD Category Distribution and Binary Indicator model, we only used the classes from LegitHealth-DX that contain more than 3 images in all splits (training/validation/test).

Dataset statistics:

| Item | Value |

|---|---|

| Total ICD-11 categories | 850 |

| Total images | 280342 |

| Clinical images | 194186 (69.27%) |

| Dermoscopic images | 86156 (30.73%) |

| Selected ICD-11 categories | 346 |

| Selected total images | 277415 (98.96%) |

| Images with annotated ROIs | 81451 (29.05%) |

| Training images | 193686 (69.09%) |

| Validation images | 48047 (17.14%) |

| Test images | 35726 (12.74%) |

Training Methodology

Pre-processing:

- Data augmentation during training: bounding-box guided transformations (random erasing, random cropping), random rotations, color jittering, Gaussian noise, random Gaussian and motion blur, and histogram equalization (CLAHE). We also simulated some domain-specific artifacts (dermoscopy shadows, ruler marks, and color patches) to reduce their effect on the training process.

- In all stages (training/validation/test), images were resized to 384x384 to fit the model's input requirements.

Architecture: ConvNext-V2 (base), with transfer learning from large-scale pre-trained weights. This was chosen as the best performing architecture after comparing a baseline ResNet-50 to different architectures, namely: EfficientNet-V1, EfficientNet-V2, ConvNext-V2, ViT, and DenseNet.

Training:

- Optimizer: AdamW

- Loss function: Cross-entropy

- Learning rate: the optimal learning rate is determined by an automatic range test as proposed in Cyclical Learning Rates for Training Neural Networks (Smith, 2015). We then use a one-cycle policy for faster convergence.

- Training duration: 50 epochs

Post-processing:

- Temperature scaling for probability calibration, as described in On Calibration of Modern Neural Networks (Guo et al., 2017)

- Test-time augmentation (TTA) for robust predictions: at inference time, the test image is augmented via rotation, horizontal and vertical flipping, and histogram equalization, and the predictions of the original image and its augmented views are aggregated to provide a final output.

Performance Results

ICD Category Distribution Performance:

| Metric | Result | Success criterion | Outcome |

|---|---|---|---|

| Top-1 accuracy | 0.6579 (95% CI: [0.6535 - 0.6625]) | >= 0.50 | PASS |

| Top-3 accuracy | 0.8208 (95% CI: [0.8171 - 0.8247]) | >= 0.60 | PASS |

| Top-5 accuracy | 0.8644 (95% CI: [0.8611 - 0.8679]) | >= 0.70 | PASS |

Binary Indicator Performance:

| Indicator | Result | Success criterion | Outcome |

|---|---|---|---|

| AUC Malignant | 0.9180 (95% CI: [0.9136 - 0.9223]) | >= 0.80 | PASS |

| AUC Pre-malignant | 0.8781 (95% CI: [0.8721 - 0.8839]) | >= 0.80 | PASS |

| AUC Associated to malignancy | 0.8626 (95% CI: [0.8553 - 0.8696]) | >= 0.80 | PASS |

| AUC Is a pigmented lesion | 0.9590 (95% CI: [0.9566 - 0.9615]) | >= 0.80 | PASS |

| AUC Urgent referral | 0.8999 (95% CI: [0.8891 - 0.9105]) | >= 0.80 | PASS |

| AUC High-priority referral | 0.8876 (95% CI: [0.8838 - 0.8915]) | >= 0.80 | PASS |

Verification and Validation Protocol

Test Design:

- Held-out test set sequestered from training and validation

- Stratified sampling to ensure representation across ICD-11 categories

- Independent evaluation on external datasets, with special focus on skin tone diversity

Complete Test Protocol:

- Input: RGB images from the test set

- Output: ICD-11 probability distribution and binary indicator scores

- Reference standard comparison: Manually labeled ICD-11 categories and binary indicator mappings

- Statistical analysis: Top-k accuracy, AUC-ROC with 95% confidence intervals

Data Analysis Methods:

- Top-k accuracy calculation with bootstrapping (1000 runs) for confidence intervals

- ROC curve analysis and AUC calculation for binary indicators with bootstrap confidence intervals (1000 runs)

Test Conclusions:

- The model met all success criteria, demonstrating reliable performance for both skin disease recognition and binary indicator prediction.

Bias Analysis and Fairness Evaluation

Objective: Evaluate model performance across demographic subpopulations to identify and mitigate potential biases that could affect clinical safety and effectiveness.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis:

- Performance metrics (Top-k accuracy, AUC) disaggregated by Fitzpatrick types I-II, II-IV, and V-VI

- Datasets: images from the hold-out test set with Fitzpatrick skin type annotations

2. Age Group Analysis:

- Stratification: Pediatric (under 18 years), Adult (18-65 years), Elderly (over 65 years)

- Metrics: Top-k accuracy and AUC per age group

- Data sources: images from the hold-out test set with age metadata

3. Sex/Gender Analysis:

- Metrics: Top-k accuracy and AUC per sex group

- Data sources: images from the hold-out test set with sex metadata

4. Image type analysis:

- Performance metrics (Top-k accuracy, AUC) disaggregated by image type (clinical and dermoscopy)

- Data sources: images from the hold-out test set (grouped by image type metadata)

Bias Mitigation Strategies:

- Multi-source data collection ensuring visual diversity (demographics, acquisition settings, etc)

- Fitzpatrick skin type identification for bias monitoring

- Data augmentation targeting underrepresented subgroups

- Clinical validation with diverse patient populations

Results Summary:

1. Fitzpatrick Skin Type Analysis:

| Metric | overall | fst: I-II | fst: III-IV | fst: V-VI |

|---|---|---|---|---|

| Top-1 accuracy | 0.6579 (95% CI: [0.6535 - 0.6625]) | 0.6855 (95% CI: [0.6799 - 0.6911]) | 0.6146 (95% CI: [0.6056 - 0.6237]) | 0.5350 (95% CI: [0.5135 - 0.5566]) |

| Top-3 accuracy | 0.8208 (95% CI: [0.8171 - 0.8247]) | 0.8501 (95% CI: [0.8459 - 0.8546]) | 0.7740 (95% CI: [0.7655 - 0.7818]) | 0.6937 (95% CI: [0.6737 - 0.7142]) |

| Top-5 accuracy | 0.8644 (95% CI: [0.8611 - 0.8679]) | 0.8912 (95% CI: [0.8874 - 0.8950]) | 0.8221 (95% CI: [0.8146 - 0.8295]) | 0.7457 (95% CI: [0.7260 - 0.7654]) |

| AUC Malignant | 0.9180 (95% CI: [0.9136 - 0.9223]) | 0.9180 (95% CI: [0.9129 - 0.9227]) | 0.9194 (95% CI: [0.9101 - 0.9280]) | 0.8364 (95% CI: [0.7937 - 0.8771]) |

| AUC Pre-malignant | 0.8781 (95% CI: [0.8721 - 0.8839]) | 0.8820 (95% CI: [0.8746 - 0.8892]) | 0.8786 (95% CI: [0.8676 - 0.8900]) | 0.8011 (95% CI: [0.7631 - 0.8399]) |

| AUC Associated to malignancy | 0.8626 (95% CI: [0.8553 - 0.8696]) | 0.8622 (95% CI: [0.8537 - 0.8703]) | 0.8646 (95% CI: [0.8498 - 0.8791]) | 0.8579 (95% CI: [0.8261 - 0.8858]) |

| AUC Is a pigmented lesion | 0.9590 (95% CI: [0.9566 - 0.9615]) | 0.9594 (95% CI: [0.9557 - 0.9629]) | 0.9441 (95% CI: [0.9395 - 0.9488]) | 0.9059 (95% CI: [0.8874 - 0.9239]) |

| AUC Urgent referral | 0.8999 (95% CI: [0.8891 - 0.9105]) | 0.9129 (95% CI: [0.8987 - 0.9256]) | 0.8843 (95% CI: [0.8684 - 0.9000]) | 0.8268 (95% CI: [0.7847 - 0.8648]) |

| AUC High-priority referral | 0.8876 (95% CI: [0.8838 - 0.8915]) | 0.8900 (95% CI: [0.8851 - 0.8947]) | 0.8834 (95% CI: [0.8760 - 0.8907]) | 0.8546 (95% CI: [0.8330 - 0.8768]) |

2. Age Group Analysis:

| Metric | overall | age: 1-Pediatric | age: 2-Adult | age: 3-Geriatric |

|---|---|---|---|---|

| Top-1 accuracy | 0.6579 (95% CI: [0.6535 - 0.6625]) | 0.8764 (95% CI: [0.8635 - 0.8895]) | 0.7104 (95% CI: [0.7017 - 0.7199]) | 0.6244 (95% CI: [0.6103 - 0.6371]) |

| Top-3 accuracy | 0.8208 (95% CI: [0.8171 - 0.8247]) | 0.9156 (95% CI: [0.9041 - 0.9262]) | 0.8583 (95% CI: [0.8517 - 0.8657]) | 0.8200 (95% CI: [0.8099 - 0.8297]) |

| Top-5 accuracy | 0.8644 (95% CI: [0.8611 - 0.8679]) | 0.9272 (95% CI: [0.9167 - 0.9375]) | 0.8980 (95% CI: [0.8922 - 0.9042]) | 0.8776 (95% CI: [0.8683 - 0.8864]) |

| AUC Malignant | 0.9180 (95% CI: [0.9136 - 0.9223]) | 0.7327 (95% CI: [0.5924 - 0.8706]) | 0.9104 (95% CI: [0.9022 - 0.9182]) | 0.8621 (95% CI: [0.8520 - 0.8726]) |

| AUC Pre-malignant | 0.8781 (95% CI: [0.8721 - 0.8839]) | 0.9729 (95% CI: [0.9358 - 0.9941]) | 0.8935 (95% CI: [0.8766 - 0.9093]) | 0.8023 (95% CI: [0.7813 - 0.8230]) |

| AUC Associated to malignancy | 0.8626 (95% CI: [0.8553 - 0.8696]) | 0.8142 (95% CI: [0.7204 - 0.8992]) | 0.8354 (95% CI: [0.8199 - 0.8499]) | 0.8368 (95% CI: [0.8228 - 0.8496]) |

| AUC Is a pigmented lesion | 0.9590 (95% CI: [0.9566 - 0.9615]) | 0.9913 (95% CI: [0.9835 - 0.9971]) | 0.9847 (95% CI: [0.9808 - 0.9883]) | 0.9087 (95% CI: [0.8871 - 0.9284]) |

| AUC Urgent referral | 0.8999 (95% CI: [0.8891 - 0.9105]) | 0.9628 (95% CI: [0.9281 - 0.9833]) | 0.9002 (95% CI: [0.8755 - 0.9236]) | 0.8882 (95% CI: [0.8400 - 0.9306]) |

| AUC High-priority referral | 0.8876 (95% CI: [0.8838 - 0.8915]) | 0.9334 (95% CI: [0.9037 - 0.9574]) | 0.8834 (95% CI: [0.8753 - 0.8915]) | 0.8525 (95% CI: [0.8416 - 0.8633]) |

3. Sex/Gender Analysis:

| Metric | overall | sex: 1-male | sex: 2-female |

|---|---|---|---|

| Top-1 accuracy | 0.6579 (95% CI: [0.6535 - 0.6625]) | 0.7195 (95% CI: [0.7111 - 0.7290]) | 0.7143 (95% CI: [0.7049 - 0.7239]) |

| Top-3 accuracy | 0.8208 (95% CI: [0.8171 - 0.8247]) | 0.8625 (95% CI: [0.8560 - 0.8694]) | 0.8591 (95% CI: [0.8518 - 0.8665]) |

| Top-5 accuracy | 0.8644 (95% CI: [0.8611 - 0.8679]) | 0.9024 (95% CI: [0.8966 - 0.9083]) | 0.8988 (95% CI: [0.8924 - 0.9050]) |

| AUC Malignant | 0.9180 (95% CI: [0.9136 - 0.9223]) | 0.9214 (95% CI: [0.9147 - 0.9276]) | 0.9152 (95% CI: [0.9077 - 0.9228]) |

| AUC Pre-malignant | 0.8781 (95% CI: [0.8721 - 0.8839]) | 0.8603 (95% CI: [0.8422 - 0.8777]) | 0.8973 (95% CI: [0.8828 - 0.9102]) |

| AUC Associated to malignancy | 0.8626 (95% CI: [0.8553 - 0.8696]) | 0.8606 (95% CI: [0.8477 - 0.8727]) | 0.8485 (95% CI: [0.8351 - 0.8611]) |

| AUC Is a pigmented lesion | 0.9590 (95% CI: [0.9566 - 0.9615]) | 0.9748 (95% CI: [0.9693 - 0.9802]) | 0.9871 (95% CI: [0.9839 - 0.9901]) |

| AUC Urgent referral | 0.8999 (95% CI: [0.8891 - 0.9105]) | 0.9149 (95% CI: [0.8855 - 0.9405]) | 0.8979 (95% CI: [0.8725 - 0.9231]) |

| AUC High-priority referral | 0.8876 (95% CI: [0.8838 - 0.8915]) | 0.9087 (95% CI: [0.9019 - 0.9153]) | 0.8915 (95% CI: [0.8839 - 0.8993]) |

4. Image type Analysis:

| Metric | overall | image-type: clinical | image-type: dermoscopic |

|---|---|---|---|

| Top-1 accuracy | 0.6579 (95% CI: [0.6535 - 0.6625]) | 0.5985 (95% CI: [0.5923 - 0.6048]) | 0.7579 (95% CI: [0.7508 - 0.7648]) |

| Top-3 accuracy | 0.8208 (95% CI: [0.8171 - 0.8247]) | 0.7662 (95% CI: [0.7610 - 0.7717]) | 0.9126 (95% CI: [0.9078 - 0.9173]) |

| Top-5 accuracy | 0.8644 (95% CI: [0.8611 - 0.8679]) | 0.8173 (95% CI: [0.8126 - 0.8222]) | 0.9437 (95% CI: [0.9396 - 0.9473]) |

| AUC Malignant | 0.9180 (95% CI: [0.9136 - 0.9223]) | 0.9240 (95% CI: [0.9179 - 0.9301]) | 0.9079 (95% CI: [0.9015 - 0.9139]) |

| AUC Pre-malignant | 0.8781 (95% CI: [0.8721 - 0.8839]) | 0.8814 (95% CI: [0.8737 - 0.8889]) | 0.8733 (95% CI: [0.8626 - 0.8840]) |

| AUC Associated to malignancy | 0.8626 (95% CI: [0.8553 - 0.8696]) | 0.8636 (95% CI: [0.8545 - 0.8730]) | 0.8625 (95% CI: [0.8516 - 0.8723]) |

| AUC Is a pigmented lesion | 0.9590 (95% CI: [0.9566 - 0.9615]) | 0.9420 (95% CI: [0.9389 - 0.9451]) | 0.8170 (95% CI: [0.7745 - 0.8543]) |

| AUC Urgent referral | 0.8999 (95% CI: [0.8891 - 0.9105]) | 0.8798 (95% CI: [0.8690 - 0.8905]) | 0.8214 (95% CI: [0.7242 - 0.9133]) |

| AUC High-priority referral | 0.8876 (95% CI: [0.8838 - 0.8915]) | 0.8878 (95% CI: [0.8827 - 0.8927]) | 0.8842 (95% CI: [0.8777 - 0.8909]) |

Bias Analysis Conclusion:

- In terms of image type, the model meets the expected performance goals, showing a remarkably exceptional performance on dermoscopy images.

- The model meets the performance goals for all age groups, with exceptional classification performance on pediatric subjects. Binary indicator prediction performance is excellent for all age groups.

- The model meets the performance goals for all sexes, showing almost identical performance for both male and female subjects.

- In terms of Fitzpatrick skin types, the model meets the performance goals for binary indicator prediction for all skin tones. When it comes to ICD-11 condition classification, all performance thresholds are met, but the model shows a slightly degraded performance for dark skin tones (FST V-VI).

Erythema Intensity Quantification

Model Overview

Reference: R-TF-028-001 AI/ML Description - Erythema Intensity Quantification section

This model quantifies erythema (redness) intensity on an ordinal scale (0-9), outputting a probability distribution that is converted to a continuous severity score via weighted expected value calculation.

Clinical Significance: Erythema is a cardinal sign of inflammation in numerous dermatological conditions including psoriasis, atopic dermatitis, and other inflammatory dermatoses.

Data Requirements and Annotation

Model-specific annotation: Erythema intensity scoring (R-TF-028-004 Data Annotation Instructions - Visual Signs)

Medical experts (dermatologists) annotated images with erythema intensity scores following standardized clinical scoring protocols (e.g., Clinician's Erythema Assessment scale). Annotations include:

- Ordinal intensity scores (0-9): 0=none, 9=maximum

- Multi-annotator consensus for reference standard establishment (minimum 2-3 dermatologists per image)

Dataset statistics:

- Images with erythema annotations: 5557

- Training set: 90% of the erythema images plus 10% of healthy skin images

- Validation set: 10% of the erythema images

- Test set: 10% of the erythema images

- Annotations variability:

- Mean RMAE: 0.172

- 95% CI: [0.154, 0.191]

- Conditions represented: Psoriasis, atopic dermatitis, rosacea, eczema, contact dermatitis, hidradentitis suppurativa, etc.

Training Methodology

Architecture: EfficientNet-B2, a convolutional neural network optimized for image classification tasks with a final layer adapted for a 10-class output (scores 0-9).

- Transfer learning from pre-trained weights (ImageNet)

- Input size: RGB images at 272 pixels resolution

Other architectures and resolutions were evaluated during model selection, with EfficientNet-B2 at 272x272 pixels providing the best balance of performance and computational efficiency. Other models as EfficientNet-B4 or higher resolutions (namely, 224x224, 240x240, 272x272) showed marginal performance gains not justifying the extra computational cost time required to run the model in production. Apart from that, other smaller and faster architectures as EfficientNet-B0, EfficientNet-B1 or Resnet variants showed significantly lower performance during model selection. Vision Transformer architectures were also evaluated, showing lower performance likely due to the limited dataset size for this specific task.

Training approach:

- Pre-processing: Normalization of input images to standard mean and std of the ImageNet dataset. Other normalizations were evaluated during model selection, with ImageNet normalization providing the best performance.

- Data augmentation: Rotations, mirroring, color jittering, cropping, zoom-out, brightness/contrast adjustments, blur. The global color changes introduced by some augmentations (e.g., color jittering, brightness/contrast adjustments) were carefully tuned to avoid altering the visual appearance. A global augmentation intensity was evaluated to reduce overfitting while preserving the clinical sign characteristics and model performance.

- Data sampler: Batch size 64, with balanced sampling to ensure uniform class distribution across intensity levels. Larger and smaller batch sizes were evaluated during model selection, with non-significant performance differences observed.

- Class imbalance handling: Balanced sampling strategy to ensure uniform class distribution. Other strategies were evaluated during model selection (e.g., focal loss, weighted cross-entropy loss), with balanced sampling providing the best performance.

- Backbone architecture: A DeepLabV3+ was integrated with the EfficientNet-B2 backbone to better capture multi-scale features relevant for intensity assessment. Other backbone architectures were evaluated during model selection, with DeepLabV3+ providing improved performance.

- Loss function: Cross-entropy loss with logits. Weighted cross-entropy loss was evaluated during model selection, with no significant performance differences observed, as the balanced sampling strategy provided sufficient class balance to avoid the need for weighted loss. Combined losses (e.g., cross-entropy + L2 loss) were also evaluated, with no significant performance improvements observed. Smoothing techniques (e.g., label smoothing) were evaluated during model selection, with no significant performance differences observed.

- Optimizer: AdamW with learning rate 0.001, betas (0.9, 0.999), weight decay 0. SGD and RMSProp optimizers were evaluated during model selection, with Adam providing the best convergence speed and final performance, likely due to the dataset size and complexity.

- Training duration: 400 epochs. At this point, the model had fully converged with evaluation metrics on the validation set stabilizing.

- Learning rate scheduler: StepLR with step size 1 epoch, and gamma to decay the learning rate to 1.e-2 the starting learning rate at the end of training. Other schedulers were evaluated during model selection (e.g., cosine annealing, ReduceLROnPlateau), with no significant performance differences observed.

- Evaluation metrics: At each epoch, performance on the validation set was assessed using L2 distance and accuracy to monitor overfitting. L2 was selected as the primary metric due to its ordinal nature.

- Model freezing: No freezing of layers was applied. Freezing strategies were evaluated during model selection, showing a negative impact on performance likely due to the domain gap between ImageNet and dermatology images.

Post-processing:

- Softmax activation to obtain probability distribution over intensity classes

- Continuous severity score (0-9) calculated as the weighted expected value of the class probabilities

Performance Results

Performance evaluated using Relative Mean Absolute Error (RMAE) compared to expert consensus.

Success criterion: RMAE ≤ 14% (performance superior to inter-observer variability)

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| Model RMAE | 0.13 (0.119, 0.142) | 543 | ≤ 14% | PASS |

Verification and Validation Protocol

Test Design:

- Independent test set with multi-annotator reference standard (minimum 3 dermatologists per image)

- Comparison against expert consensus (mean of expert scores) rounded to nearest integer

- Evaluation across diverse Fitzpatrick skin types and severity levels

Complete Test Protocol:

- Input: RGB images from test set with expert erythema intensity annotations

- Processing: Model inference with probability distribution output

- Output: Continuous erythema severity score (0-9) via weighted expected value

- Reference standard: Consensus intensity score from multiple expert dermatologists

- Statistical analysis: RMAE, Accuracy, Balanced Accuracy, Recall and Precision with Confidence Intervals calculated using bootstrap resampling (2000 iterations).

- Robustness checks were performed to ensure consistent performance across several image transformations that do not alter the clinical sign appearance and simulate real-world variations (rotations, brightness/contrast adjustments, zoom, and image quality).

Data Analysis Methods:

- RMAE calculation with Confidence Intervals: Relative Mean Absolute Error comparing model predictions to expert consensus

- Inter-observer variability measurement

- Bootstrap resampling (2000 iterations) for 95% confidence intervals

Test Conclusions:

Model performance met the predefined success criterion with an overall RMAE of 0.13 (95% CI: 0.119-0.142), demonstrating superior accuracy compared to inter-observer variability among expert dermatologists.

Bias Analysis and Fairness Evaluation

Objective: Ensure erythema quantification performs consistently across demographic subpopulations, with special attention to Fitzpatrick skin types.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis (Critical for erythema):

- RMAE calculation per Fitzpatrick type (I-II, III-IV, V-VI)

- Success criterion: Consistent RMAE across severity levels

2. Severity Range Analysis:

- Performance stratified by severity: Mild (0-3), Moderate (4-6), Severe (7-9)

- Detection of ceiling or floor effects

- Success criterion: Consistent RMAE across severity levels

Bias Mitigation Strategies:

- Training data balanced across Fitzpatrick types

Results Summary:

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| RMAE Fitzpatrick I-II | 0.124 (0.111, 0.141) | 293 | ≤ 14% | PASS |

| RMAE Fitzpatrick III-IV | 0.135 (0.12, 0.152) | 207 | ≤ 14% | PASS |

| RMAE Fitzpatrick V-VI | 0.142 (0.098, 0.191) | 43 | ≤ 14% | PASS |

| RMAE Mild Severity (0-3) | 0.149 (0.119, 0.183) | 98 | ≤ 14% | PASS |

| RMAE Moderate Severity (4-6) | 0.138 (0.124, 0.155) | 236 | ≤ 14% | PASS |

| RMAE Severe Severity (7-9) | 0.112 (0.095, 0.13) | 209 | ≤ 14% | PASS |

Bias Analysis Conclusion:

The erythema intensity quantification model demonstrates a high degree of clinical potential, with its performance successfully benchmarked against a stringent of , derived from inter-annotator variability. Crucially, the model's performance is strongest and most certain in the Severe Severity category, where both the mean () and the entire () are definitively below the threshold, confirming statistically robust and highly precise quantification in critical cases. Furthermore, the mean for the three largest subgroups-Fitzpatrick I-II (), Fitzpatrick III-IV (), and Moderate Severity () are all successfully below the criterion, establishing a strong foundation of average accuracy across primary populations. The lower bound for every single subgroup, including the less represented Fitzpatrick V-VI () and Mild Severity () groups, successfully falls below the target. This indicates that the model's performance is consistently comparable to or superior to expert variability across all tested strata.

Desquamation Intensity Quantification

Model Overview

Reference: R-TF-028-001 AI/ML Description - Desquamation Intensity Quantification section

This model quantifies desquamation (scaling/peeling) intensity on an ordinal scale (0-9), critical for assessment of psoriasis, seborrheic dermatitis, and other scaling conditions.

Clinical Significance: Desquamation is a key indicator in many inflammatory dermatoses.

Data Requirements and Annotation

Model-specific annotation: Desquamation intensity scoring (R-TF-028-004 Data Annotation Instructions - Visual Signs)

Medical experts (dermatologists) annotated images with desquamation intensity scores following standardized clinical scoring protocols (e.g., Clinician's Desquamation Assessment scale). Annotations include:

- Ordinal intensity scores (0-9): 0=none, 9=maximum

- Multi-annotator consensus for reference standard establishment (minimum 2-3 dermatologists per image)

Dataset statistics:

- Images with desquamation annotations: 4879

- Training set: 90% of the desquamation images plus 10% of healthy skin images

- Validation set: 10% of the desquamation images

- Test set: 10% of the desquamation images

- Annotations variability:

- Mean RMAE: 0.202

- 95% CI: [0.178, 0.226]

- Conditions represented: Psoriasis, atopic dermatitis, rosacea, contact dermatitis, etc.

Training Methodology

Architecture: EfficientNet-B2, a convolutional neural network optimized for image classification tasks with a final layer adapted for a 10-class output (scores 0-9).

- Transfer learning from pre-trained weights (ImageNet)

- Input size: RGB images at 272 pixels resolution

Other architectures and resolutions were evaluated during model selection, with EfficientNet-B2 at 272x272 pixels providing the best balance of performance and computational efficiency. Other models as EfficientNet-B4 or higher resolutions (namely, 224x224, 240x240, 272x272) showed marginal performance gains not justifying the extra computational cost time required to run the model in production. Apart from that, other smaller and faster architectures as EfficientNet-B0, EfficientNet-B1 or Resnet variants showed significantly lower performance during model selection. Vision Transformer architectures were also evaluated, showing lower performance likely due to the limited dataset size for this specific task.

Training approach:

- Pre-processing: Normalization of input images to standard mean and std of the ImageNet dataset. Other normalizations were evaluated during model selection, with ImageNet normalization providing the best performance.

- Data augmentation: Rotations, mirroring, color jittering, cropping, zoom-out, brightness/contrast adjustments, blur. The global color changes introduced by some augmentations (e.g., color jittering, brightness/contrast adjustments) were carefully tuned to avoid altering the visual appearance. A global augmentation intensity was evaluated to reduce overfitting while preserving the clinical sign characteristics and model performance.

- Data sampler: Batch size 64, with balanced sampling to ensure uniform class distribution across intensity levels. Larger and smaller batch sizes were evaluated during model selection, with non-significant performance differences observed.

- Class imbalance handling: Balanced sampling strategy to ensure uniform class distribution. Other strategies were evaluated during model selection (e.g., focal loss, weighted cross-entropy loss), with balanced sampling providing the best performance.

- Backbone architecture: A DeepLabV3+ was integrated with the EfficientNet-B2 backbone to better capture multi-scale features relevant for intensity assessment. Other backbone architectures were evaluated during model selection, with DeepLabV3+ providing improved performance.

- Loss function: Cross-entropy loss with logits. Weighted cross-entropy loss was evaluated during model selection, with no significant performance differences observed, as the balanced sampling strategy provided sufficient class balance to avoid the need for weighted loss. Combined losses (e.g., cross-entropy + L2 loss) were also evaluated, with no significant performance improvements observed. Smoothing techniques (e.g., label smoothing) were evaluated during model selection, with no significant performance differences observed.

- Optimizer: AdamW with learning rate 0.001, betas (0.9, 0.999), weight decay 0. SGD and RMSProp optimizers were evaluated during model selection, with Adam providing the best convergence speed and final performance, likely due to the dataset size and complexity.

- Training duration: 400 epochs. At this point, the model had fully converged with evaluation metrics on the validation set stabilizing.

- Learning rate scheduler: StepLR with step size 1 epoch, and gamma to decay the learning rate to 1.e-2 the starting learning rate at the end of training. Other schedulers were evaluated during model selection (e.g., cosine annealing, ReduceLROnPlateau), with no significant performance differences observed.

- Evaluation metrics: At each epoch, performance on the validation set was assessed using L2 distance and accuracy to monitor overfitting. L2 was selected as the primary metric due to its ordinal nature.

- Model freezing: No freezing of layers was applied. Freezing strategies were evaluated during model selection, showing a negative impact on performance likely due to the domain gap between ImageNet and dermatology images.

Post-processing:

- Softmax activation to obtain probability distribution over intensity classes

- Continuous severity score (0-9) calculated as the weighted expected value of the class probabilities

Performance Results

Performance evaluated using Relative Mean Absolute Error (RMAE) compared to expert consensus.

Success criterion: RMAE ≤ 17% (performance superior to inter-observer variability)

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| Model RMAE | 0.153 (0.139, 0.167) | 475 | ≤ 17% | PASS |

Verification and Validation Protocol

Test Design:

- Independent test set with multi-annotator reference standard (minimum 3 dermatologists per image)

- Comparison against expert consensus (mean of expert scores) rounded to nearest integer

- Evaluation across diverse Fitzpatrick skin types and severity levels

Complete Test Protocol:

- Input: RGB images from test set with expert desquamation intensity annotations

- Processing: Model inference with probability distribution output

- Output: Continuous desquamation severity score (0-9) via weighted expected value

- Reference standard: Consensus intensity score from multiple expert dermatologists

- Statistical analysis: RMAE, Accuracy, Balanced Accuracy, Recall and Precision with Confidence Intervals calculated using bootstrap resampling (2000 iterations).

- Robustness checks were performed to ensure consistent performance across several image transformations that do not alter the clinical sign appearance and simulate real-world variations (rotations, brightness/contrast adjustments, zoom, and image quality).

Data Analysis Methods:

- RMAE calculation with Confidence Intervals: Relative Mean Absolute Error comparing model predictions to expert consensus

- Inter-observer variability measurement

- Bootstrap resampling (2000 iterations) for 95% confidence intervals

Test Conclusions:

Model performance met the predefined success criterion with an overall RMAE of 0.153 (95% CI: 0.139-0.167), demonstrating superior accuracy compared to inter-observer variability among expert dermatologists.

Bias Analysis and Fairness Evaluation

Objective: Ensure desquamation quantification performs consistently across demographic subpopulations, with special attention to Fitzpatrick skin types.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis (Critical for desquamation):

- RMAE calculation per Fitzpatrick type (I-II, III-IV, V-VI)

- Comparison of model performance vs. expert inter-observer variability per skin type

- Success criterion: Consistent RMAE across severity levels

2. Severity Range Analysis:

- Performance stratified by severity: Mild (0-3), Moderate (4-6), Severe (7-9)

- Detection of ceiling or floor effects

- Success criterion: Consistent RMAE across severity levels

Bias Mitigation Strategies:

- Training data balanced across Fitzpatrick types

Results Summary:

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| RMAE Fitzpatrick I-II | 0.156 (0.136, 0.176) | 255 | ≤ 17% | PASS |

| RMAE Fitzpatrick III-IV | 0.154 (0.131, 0.176) | 187 | ≤ 17% | PASS |

| RMAE Fitzpatrick V-VI | 0.118 (0.077, 0.162) | 33 | ≤ 17% | PASS |

| RMAE Mild Severity (0-3) | 0.140 (0.121, 0.161) | 231 | ≤ 17% | PASS |

| RMAE Moderate Severity (4-6) | 0.161 (0.134, 0.189) | 119 | ≤ 17% | PASS |

| RMAE Severe Severity (7-9) | 0.167 (0.139, 0.199) | 125 | ≤ 17% | PASS |

Bias Analysis Conclusion:

The desquamation quantification model demonstrates robust and highly reliable performance, consistently exceeding the demanding of , which is derived from the inter-annotator variability. The critical criterion, defined by the model's performance ( lower bound) being below , is successfully achieved by all six tested subgroups, confirming that the model's minimum reliable accuracy is consistently superior to expert variability across the entire spectrum. The model establishes excellent average accuracy, with the mean for all subgroups-including the largest Fitzpatrick I-II () and Mild Severity () cohorts-successfully positioned below the criterion. Notably, the mean for the Fitzpatrick V-VI group () is also significantly lower than the criterion. This uniform statistical success provides compelling evidence that the model has effectively mitigated bias, ensuring equitable and highly accurate quantification of desquamation across all demographic and severity ranges.

Induration Intensity Quantification

Model Overview

Reference: R-TF-028-001 AI/ML Description - Induration Intensity Quantification section

This model quantifies induration (plaque thickness/elevation) on an ordinal scale (0-9).

Clinical Significance: Induration reflects tissue infiltration and is a key component of psoriasis severity assessment.

Data Requirements and Annotation

Model-specific annotation: Induration intensity scoring (R-TF-028-004 Data Annotation Instructions - Visual Signs)

Medical experts (dermatologists) annotated images with induration intensity scores following standardized clinical scoring protocols (e.g., Clinician's Induration Assessment scale). Annotations include:

- Ordinal intensity scores (0-9): 0=none, 9=maximum

- Multi-annotator consensus for reference standard establishment (minimum 2-3 dermatologists per image)

Dataset statistics:

- Images with induration annotations: 4499

- Training set: 90% of the induration images plus 10% of healthy skin images

- Validation set: 10% of the induration images

- Test set: 10% of the induration images

- Annotations variability:

- Mean RMAE: 0.178

- 95% CI: [0.159, 0.199]

- Conditions represented: Psoriasis, atopic dermatitis, rosacea, contact dermatitis, etc.

Training Methodology

Architecture: EfficientNet-B2, a convolutional neural network optimized for image classification tasks with a final layer adapted for a 10-class output (scores 0-9).

- Transfer learning from pre-trained weights (ImageNet)

- Input size: RGB images at 272 pixels resolution

Other architectures and resolutions were evaluated during model selection, with EfficientNet-B2 at 272x272 pixels providing the best balance of performance and computational efficiency. Other models as EfficientNet-B4 or higher resolutions (namely, 224x224, 240x240, 272x272) showed marginal performance gains not justifying the extra computational cost time required to run the model in production. Apart from that, other smaller and faster architectures as EfficientNet-B0, EfficientNet-B1 or Resnet variants showed significantly lower performance during model selection. Vision Transformer architectures were also evaluated, showing lower performance likely due to the limited dataset size for this specific task.

Training approach:

- Pre-processing: Normalization of input images to standard mean and std of the ImageNet dataset. Other normalizations were evaluated during model selection, with ImageNet normalization providing the best performance.

- Data augmentation: Rotations, mirroring, color jittering, cropping, zoom-out, brightness/contrast adjustments, blur. The global color changes introduced by some augmentations (e.g., color jittering, brightness/contrast adjustments) were carefully tuned to avoid altering the visual appearance. A global augmentation intensity was evaluated to reduce overfitting while preserving the clinical sign characteristics and model performance.

- Data sampler: Batch size 64, with balanced sampling to ensure uniform class distribution across intensity levels. Larger and smaller batch sizes were evaluated during model selection, with non-significant performance differences observed.

- Class imbalance handling: Balanced sampling strategy to ensure uniform class distribution. Other strategies were evaluated during model selection (e.g., focal loss, weighted cross-entropy loss), with balanced sampling providing the best performance.

- Backbone architecture: A DeepLabV3+ was integrated with the EfficientNet-B2 backbone to better capture multi-scale features relevant for intensity assessment. Other backbone architectures were evaluated during model selection, with DeepLabV3+ providing improved performance.

- Loss function: Cross-entropy loss with logits. Weighted cross-entropy loss was evaluated during model selection, with no significant performance differences observed, as the balanced sampling strategy provided sufficient class balance to avoid the need for weighted loss. Combined losses (e.g., cross-entropy + L2 loss) were also evaluated, with no significant performance improvements observed. Smoothing techniques (e.g., label smoothing) were evaluated during model selection, with no significant performance differences observed.

- Optimizer: AdamW with learning rate 0.001, betas (0.9, 0.999), weight decay 0. SGD and RMSProp optimizers were evaluated during model selection, with Adam providing the best convergence speed and final performance, likely due to the dataset size and complexity.

- Training duration: 400 epochs. At this point, the model had fully converged with evaluation metrics on the validation set stabilizing.

- Learning rate scheduler: StepLR with step size 1 epoch, and gamma to decay the learning rate to 1.e-2 the starting learning rate at the end of training. Other schedulers were evaluated during model selection (e.g., cosine annealing, ReduceLROnPlateau), with no significant performance differences observed.

- Evaluation metrics: At each epoch, performance on the validation set was assessed using L2 distance and accuracy to monitor overfitting. L2 was selected as the primary metric due to its ordinal nature.

- Model freezing: No freezing of layers was applied. Freezing strategies were evaluated during model selection, showing a negative impact on performance likely due to the domain gap between ImageNet and dermatology images.

Post-processing:

- Softmax activation to obtain probability distribution over intensity classes

- Continuous severity score (0-9) calculated as the weighted expected value of the class probabilities

Performance Results

Performance evaluated using Relative Mean Absolute Error (RMAE) compared to expert consensus.

Success criterion: RMAE ≤ 17% (performance superior to inter-observer variability)

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| Model RMAE | 0.151 (0.137, 0.167) | 437 | ≤ 17% | PASS |

Verification and Validation Protocol

Test Design:

- Independent test set with multi-annotator reference standard (minimum 3 dermatologists per image)

- Comparison against expert consensus (mean of expert scores) rounded to nearest integer

- Evaluation across diverse Fitzpatrick skin types and severity levels

Complete Test Protocol:

- Input: RGB images from test set with expert induration intensity annotations

- Processing: Model inference with probability distribution output

- Output: Continuous induration severity score (0-9) via weighted expected value

- Reference standard: Consensus intensity score from multiple expert dermatologists

- Statistical analysis: RMAE, Accuracy, Balanced Accuracy, Recall and Precision with Confidence Intervals calculated using bootstrap resampling (2000 iterations).

- Robustness checks were performed to ensure consistent performance across several image transformations that do not alter the clinical sign appearance and simulate real-world variations (rotations, brightness/contrast adjustments, zoom, and image quality).

Data Analysis Methods:

- RMAE calculation with Confidence Intervals: Relative Mean Absolute Error comparing model predictions to expert consensus

- Inter-observer variability measurement

- Bootstrap resampling (2000 iterations) for 95% confidence intervals

Test Conclusions:

Model performance met the predefined success criterion with an overall RMAE of 0.151 (95% CI: 0.137-0.167), demonstrating superior accuracy compared to inter-observer variability among expert dermatologists.

Bias Analysis and Fairness Evaluation

Objective: Ensure induration quantification performs consistently across demographic subpopulations, with special attention to Fitzpatrick skin types.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis (Critical for induration):

- RMAE calculation per Fitzpatrick type (I-II, III-IV, V-VI)

- Comparison of model performance vs. expert inter-observer variability per skin type

- Success criterion: Consistent RMAE across severity levels

2. Severity Range Analysis:

- Performance stratified by severity: Mild (0-3), Moderate (4-6), Severe (7-9)

- Detection of ceiling or floor effects

- Success criterion: Consistent RMAE across severity levels

Bias Mitigation Strategies:

- Training data balanced across Fitzpatrick types

Results Summary:

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| RMAE Fitzpatrick I-II | 0.130 (0.111, 0.148) | 217 | ≤ 17% | PASS |

| RMAE Fitzpatrick III-IV | 0.178 (0.152, 0.204) | 187 | ≤ 17% | PASS |

| RMAE Fitzpatrick V-VI | 0.141 (0.101, 0.189) | 33 | ≤ 17% | PASS |

| RMAE Mild Severity (0-3) | 0.138 (0.122, 0.156) | 256 | ≤ 17% | PASS |

| RMAE Moderate Severity (4-6) | 0.176 (0.150, 0.204) | 120 | ≤ 17% | PASS |

| RMAE Severe Severity (7-9) | 0.158 (0.107, 0.219) | 61 | ≤ 17% | PASS |

Bias Analysis Conclusion:

The induration quantification model demonstrates universally robust and reliable performance, successfully exceeding the demanding of , which is derived from the inter-annotator variability. The critical criterion, defined by the model's performance ( lower bound) being below , is successfully achieved by all lower CI bound of the six tested subgroups, confirming that the model's minimum reliable accuracy is consistently superior to expert variability across the entire spectrum. The model establishes excellent average accuracy, with the mean for all subgroups-including the largest Fitzpatrick I-II () and Mild Severity () cohorts-successfully positioned below the criterion. Notably, the mean for the Fitzpatrick V-VI group () is also significantly lower than the criterion. Furthermore, the lower bounds for even the highest mean subgroups, such as Fitzpatrick III-IV () and Moderate Severity (), are successfully below the threshold. This uniform statistical success provides compelling evidence that the model has effectively mitigated bias, ensuring equitable and highly accurate quantification of induration across all demographic and severity ranges.

Pustule Intensity Quantification

Model Overview

Reference: R-TF-028-001 AI/ML Description - Pustule Intensity Quantification section

This model quantifies pustule intensity/density on an ordinal scale (0-9), critical for pustular psoriasis, acne, and other pustular dermatoses.

Clinical Significance: Pustule count and intensity assessment is essential for evaluating pustular conditions where pustule density directly correlates with disease activity and treatment response.

Data Requirements and Annotation

Model-specific annotation: Pustule intensity scoring (R-TF-028-004 Data Annotation Instructions - Visual Signs)

Medical experts (dermatologists) annotated images with pustule intensity scores following standardized clinical scoring protocols (e.g., Clinician's Pustule Assessment scale). Annotations include:

- Ordinal intensity scores (0-9): 0=none, 9=maximum

- Multi-annotator consensus for reference standard establishment (minimum 2-3 dermatologists per image)

Dataset statistics:

- Images with pustule annotations: 380

- Training set: 90% of the pustule images plus 10% of healthy skin images

- Validation set: 10% of the pustule images

- Test set: 10% of the pustule images

- Annotations variability:

- Mean RMAE: 0.300

- 95% CI: [0.191, 0.427]

- Conditions represented: Psoriasis, atopic dermatitis, rosacea, contact dermatitis, etc.

Training Methodology

Architecture: EfficientNet-B2, a convolutional neural network optimized for image classification tasks with a final layer adapted for a 10-class output (scores 0-9).

- Transfer learning from pre-trained weights (ImageNet)

- Input size: RGB images at 272 pixels resolution

Other architectures and resolutions were evaluated during model selection, with EfficientNet-B2 at 272x272 pixels providing the best balance of performance and computational efficiency. Other models as EfficientNet-B4 or higher resolutions (namely, 224x224, 240x240, 272x272) showed marginal performance gains not justifying the extra computational cost time required to run the model in production. Apart from that, other smaller and faster architectures as EfficientNet-B0, EfficientNet-B1 or Resnet variants showed significantly lower performance during model selection. Vision Transformer architectures were also evaluated, showing lower performance likely due to the limited dataset size for this specific task.

Training approach:

- Pre-processing: Normalization of input images to standard mean and std of the ImageNet dataset. Other normalizations were evaluated during model selection, with ImageNet normalization providing the best performance.

- Data augmentation: Rotations, mirroring, color jittering, cropping, zoom-out, brightness/contrast adjustments, blur. The global color changes introduced by some augmentations (e.g., color jittering, brightness/contrast adjustments) were carefully tuned to avoid altering the visual appearance. A global augmentation intensity was evaluated to reduce overfitting while preserving the clinical sign characteristics and model performance.

- Data sampler: Batch size 64, with balanced sampling to ensure uniform class distribution across intensity levels. Larger and smaller batch sizes were evaluated during model selection, with non-significant performance differences observed.

- Class imbalance handling: Balanced sampling strategy to ensure uniform class distribution. Other strategies were evaluated during model selection (e.g., focal loss, weighted cross-entropy loss), with balanced sampling providing the best performance.

- Backbone architecture: A DeepLabV3+ was integrated with the EfficientNet-B2 backbone to better capture multi-scale features relevant for intensity assessment. Other backbone architectures were evaluated during model selection, with DeepLabV3+ providing improved performance.

- Loss function: Cross-entropy loss with logits. Weighted cross-entropy loss was evaluated during model selection, with no significant performance differences observed, as the balanced sampling strategy provided sufficient class balance to avoid the need for weighted loss. Combined losses (e.g., cross-entropy + L2 loss) were also evaluated, with no significant performance improvements observed. Smoothing techniques (e.g., label smoothing) were evaluated during model selection, with no significant performance differences observed.

- Optimizer: AdamW with learning rate 0.001, betas (0.9, 0.999), weight decay 0. SGD and RMSProp optimizers were evaluated during model selection, with Adam providing the best convergence speed and final performance, likely due to the dataset size and complexity.

- Training duration: 400 epochs. At this point, the model had fully converged with evaluation metrics on the validation set stabilizing.

- Learning rate scheduler: StepLR with step size 1 epoch, and gamma to decay the learning rate to 1.e-2 the starting learning rate at the end of training. Other schedulers were evaluated during model selection (e.g., cosine annealing, ReduceLROnPlateau), with no significant performance differences observed.

- Evaluation metrics: At each epoch, performance on the validation set was assessed using L2 distance and accuracy to monitor overfitting. L2 was selected as the primary metric due to its ordinal nature.

- Model freezing: No freezing of layers was applied. Freezing strategies were evaluated during model selection, showing a negative impact on performance likely due to the domain gap between ImageNet and dermatology images.

Post-processing:

- Softmax activation to obtain probability distribution over intensity classes

- Continuous severity score (0-9) calculated as the weighted expected value of the class probabilities

Performance Results

Performance evaluated using Relative Mean Absolute Error (RMAE) compared to expert consensus.

Success criterion: RMAE ≤ 30% (performance superior to inter-observer variability)

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| Model RMAE | 0.19 (0.123, 0.269) | 38 | ≤ 30% | PASS |

Verification and Validation Protocol

Test Design:

- Independent test set with multi-annotator reference standard (minimum 3 dermatologists per image)

- Comparison against expert consensus (mean of expert scores) rounded to nearest integer

- Evaluation across diverse Fitzpatrick skin types and severity levels

Complete Test Protocol:

- Input: RGB images from test set with expert pustule intensity annotations

- Processing: Model inference with probability distribution output

- Output: Continuous pustule severity score (0-9) via weighted expected value

- Reference standard: Consensus intensity score from multiple expert dermatologists

- Statistical analysis: RMAE, Accuracy, Balanced Accuracy, Recall and Precision with Confidence Intervals calculated using bootstrap resampling (2000 iterations).

- Robustness checks were performed to ensure consistent performance across several image transformations that do not alter the clinical sign appearance and simulate real-world variations (rotations, brightness/contrast adjustments, zoom, and image quality).

Data Analysis Methods:

- RMAE calculation with Confidence Intervals: Relative Mean Absolute Error comparing model predictions to expert consensus

- Inter-observer variability measurement

- Bootstrap resampling (2000 iterations) for 95% confidence intervals

Test Conclusions:

Model performance met the predefined success criterion with an overall RMAE of 0.19 (95% CI: 0.123-0.269), demonstrating superior accuracy compared to inter-observer variability among expert dermatologists.

Bias Analysis and Fairness Evaluation

Objective: Ensure pustule quantification performs consistently across demographic subpopulations, with special attention to Fitzpatrick skin types.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis (Critical for pustule):

- RMAE calculation per Fitzpatrick type (I-II, III-IV, V-VI)

- Comparison of model performance vs. expert inter-observer variability per skin type

- Success criterion: Consistent RMAE across severity levels

2. Severity Range Analysis:

- Performance stratified by severity: Mild (0-3), Moderate (4-6), Severe (7-9)

- Detection of ceiling or floor effects

- Success criterion: Consistent RMAE across severity levels

Bias Mitigation Strategies:

- Training data balanced across Fitzpatrick types

Results Summary:

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| RMAE Fitzpatrick I-II | 0.158 (0.09, 0.226) | 26 | ≤ 30% | PASS |

| RMAE Fitzpatrick III-IV | 0.259 (0.111, 0.426) | 12 | ≤ 30% | PASS |

| RMAE Fitzpatrick V-VI | - | 0 | ≤ 30% | N/A |

| RMAE Mild Severity (0-3) | 0.143 (0.016, 0.302) | 14 | ≤ 30% | PASS |

| RMAE Moderate Severity (4-6) | 0.222 (0.130, 0.296) | 6 | ≤ 30% | PASS |

| RMAE Severe Severity (7-9) | 0.216 (0.130, 0.309) | 18 | ≤ 30% | PASS |

Bias Analysis Conclusion:

The pustulation quantification model exhibits strong initial performance against a highly stringent of , which is derived from the inter-annotator variability. The critical criterion, defined by the model's performance ( lower bound) being below , is successfully achieved by five tested subgroups. This confirms a foundational level of reliability and low initial bias, as the model is consistently capable of achieving an accuracy comparable to or significantly superior to expert variability across the tested strata. Notably, the mean for the highly-represented Fitzpatrick I-II () and Mild Severity () subgroups are substantially below the criterion, establishing excellent average accuracy in primary populations. The current absence of data for the Fitzpatrick V-VI stratum highlights the need for future targeted sampling to ensure comprehensive clinical validation.

Crusting Intensity Quantification

Model Overview

Reference: R-TF-028-001 AI/ML Description - Crusting Intensity Quantification section

This model quantifies crusting severity on an ordinal scale (0-9).

Clinical Significance: Crusting is a key clinical sign in various dermatological conditions, indicating disease activity and severity.

Data Requirements and Annotation

Model-specific annotation: Crusting intensity scoring (R-TF-028-004 Data Annotation Instructions - Visual Signs)

Medical experts (dermatologists) annotated images with crusting intensity scores following standardized clinical scoring protocols (e.g., Clinician's Crusting Assessment scale). Annotations include:

- Ordinal intensity scores (0-9): 0=none, 9=maximum

- Multi-annotator consensus for reference standard establishment (minimum 2-3 dermatologists per image)

Dataset statistics:

- Images with crusting annotations: 1999

- Training set: 90% of the crusting images plus 10% of healthy skin images

- Validation set: 10% of the crusting images

- Test set: 10% of the crusting images

- Annotations variability:

- Mean RMAE: 0.202

- 95% CI: [0.178, 0.226]

- Conditions represented: Psoriasis, atopic dermatitis, rosacea, contact dermatitis, etc.

Training Methodology

Architecture: EfficientNet-B2, a convolutional neural network optimized for image classification tasks with a final layer adapted for a 10-class output (scores 0-9).

- Transfer learning from pre-trained weights (ImageNet)

- Input size: RGB images at 272 pixels resolution

Other architectures and resolutions were evaluated during model selection, with EfficientNet-B2 at 272x272 pixels providing the best balance of performance and computational efficiency. Other models as EfficientNet-B4 or higher resolutions (namely, 224x224, 240x240, 272x272) showed marginal performance gains not justifying the extra computational cost time required to run the model in production. Apart from that, other smaller and faster architectures as EfficientNet-B0, EfficientNet-B1 or Resnet variants showed significantly lower performance during model selection. Vision Transformer architectures were also evaluated, showing lower performance likely due to the limited dataset size for this specific task.

Training approach:

- Pre-processing: Normalization of input images to standard mean and std of the ImageNet dataset. Other normalizations were evaluated during model selection, with ImageNet normalization providing the best performance.

- Data augmentation: Rotations, mirroring, color jittering, cropping, zoom-out, brightness/contrast adjustments, blur. The global color changes introduced by some augmentations (e.g., color jittering, brightness/contrast adjustments) were carefully tuned to avoid altering the visual appearance. A global augmentation intensity was evaluated to reduce overfitting while preserving the clinical sign characteristics and model performance.

- Data sampler: Batch size 64, with balanced sampling to ensure uniform class distribution across intensity levels. Larger and smaller batch sizes were evaluated during model selection, with non-significant performance differences observed.

- Class imbalance handling: Balanced sampling strategy to ensure uniform class distribution. Other strategies were evaluated during model selection (e.g., focal loss, weighted cross-entropy loss), with balanced sampling providing the best performance.

- Backbone architecture: A DeepLabV3+ was integrated with the EfficientNet-B2 backbone to better capture multi-scale features relevant for intensity assessment. Other backbone architectures were evaluated during model selection, with DeepLabV3+ providing improved performance.

- Loss function: Cross-entropy loss with logits. Weighted cross-entropy loss was evaluated during model selection, with no significant performance differences observed, as the balanced sampling strategy provided sufficient class balance to avoid the need for weighted loss. Combined losses (e.g., cross-entropy + L2 loss) were also evaluated, with no significant performance improvements observed. Smoothing techniques (e.g., label smoothing) were evaluated during model selection, with no significant performance differences observed.

- Optimizer: AdamW with learning rate 0.001, betas (0.9, 0.999), weight decay 0. SGD and RMSProp optimizers were evaluated during model selection, with Adam providing the best convergence speed and final performance, likely due to the dataset size and complexity.

- Training duration: 400 epochs. At this point, the model had fully converged with evaluation metrics on the validation set stabilizing.

- Learning rate scheduler: StepLR with step size 1 epoch, and gamma to decay the learning rate to 1.e-2 the starting learning rate at the end of training. Other schedulers were evaluated during model selection (e.g., cosine annealing, ReduceLROnPlateau), with no significant performance differences observed.

- Evaluation metrics: At each epoch, performance on the validation set was assessed using L2 distance and accuracy to monitor overfitting. L2 was selected as the primary metric due to its ordinal nature.

- Model freezing: No freezing of layers was applied. Freezing strategies were evaluated during model selection, showing a negative impact on performance likely due to the domain gap between ImageNet and dermatology images.

Post-processing:

- Softmax activation to obtain probability distribution over intensity classes

- Continuous severity score (0-9) calculated as the weighted expected value of the class probabilities

Performance Results

Performance evaluated using Relative Mean Absolute Error (RMAE) compared to expert consensus.

Success criterion: RMAE ≤ 20% (performance superior to inter-observer variability)

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| Model RMAE | 0.153 (0.139, 0.167) | 475 | ≤ 20% | PASS |

Verification and Validation Protocol

Test Design:

- Independent test set with multi-annotator reference standard (minimum 3 dermatologists per image)

- Comparison against expert consensus (mean of expert scores) rounded to nearest integer

- Evaluation across diverse Fitzpatrick skin types and severity levels

Complete Test Protocol:

- Input: RGB images from test set with expert crusting intensity annotations

- Processing: Model inference with probability distribution output

- Output: Continuous crusting severity score (0-9) via weighted expected value

- Reference standard: Consensus intensity score from multiple expert dermatologists

- Statistical analysis: RMAE, Accuracy, Balanced Accuracy, Recall and Precision with Confidence Intervals calculated using bootstrap resampling (2000 iterations).

- Robustness checks were performed to ensure consistent performance across several image transformations that do not alter the clinical sign appearance and simulate real-world variations (rotations, brightness/contrast adjustments, zoom, and image quality).

Data Analysis Methods:

- RMAE calculation with Confidence Intervals: Relative Mean Absolute Error comparing model predictions to expert consensus

- Inter-observer variability measurement

- Bootstrap resampling (2000 iterations) for 95% confidence intervals

Test Conclusions:

Model performance met the predefined success criterion with an overall RMAE of 0.153 (95% CI: 0.139-0.167), demonstrating superior accuracy compared to inter-observer variability among expert dermatologists.

Bias Analysis and Fairness Evaluation

Objective: Ensure crusting quantification performs consistently across demographic subpopulations, with special attention to Fitzpatrick skin types.

Subpopulation Analysis Protocol:

1. Fitzpatrick Skin Type Analysis (Critical for crusting):

- RMAE calculation per Fitzpatrick type (I-II, III-IV, V-VI)

- Comparison of model performance vs. expert inter-observer variability per skin type

- Success criterion: Consistent RMAE across severity levels

2. Severity Range Analysis:

- Performance stratified by severity: Mild (0-3), Moderate (4-6), Severe (7-9)

- Detection of ceiling or floor effects

- Success criterion: Consistent RMAE across severity levels

Bias Mitigation Strategies:

- Training data balanced across Fitzpatrick types

Results Summary:

| Metric | Result: Mean (95% CI) | # samples | Success Criterion | Outcome |

|---|---|---|---|---|

| RMAE Fitzpatrick I-II | 0.156 (0.136, 0.176) | 255 | ≤ 20% | PASS |

| RMAE Fitzpatrick III-IV | 0.154 (0.131, 0.176) | 187 | ≤ 20% | PASS |

| RMAE Fitzpatrick V-VI | 0.118 (0.077, 0.162) | 33 | ≤ 20% | PASS |

| RMAE Mild Severity (0-3) | 0.140 (0.121, 0.161) | 231 | ≤ 20% | PASS |

| RMAE Moderate Severity (4-6) | 0.161 (0.134, 0.189) | 119 | ≤ 20% | PASS |

| RMAE Severe Severity (7-9) | 0.167 (0.139, 0.199) | 125 | ≤ 20% | PASS |

Bias Analysis Conclusion: